[NVIDIA AI-AGENT Summer Camp, Advanced Edition]

wang@lin 2024-08-26 11:31:02

NVIDIA AI-AGENT Summer Camp

Project name: AI-AGENT Summer Camp, RAG Intelligent Chatbot

Report date: August 18, 2024

Project lead: [wangaolin]

Project overview (required):

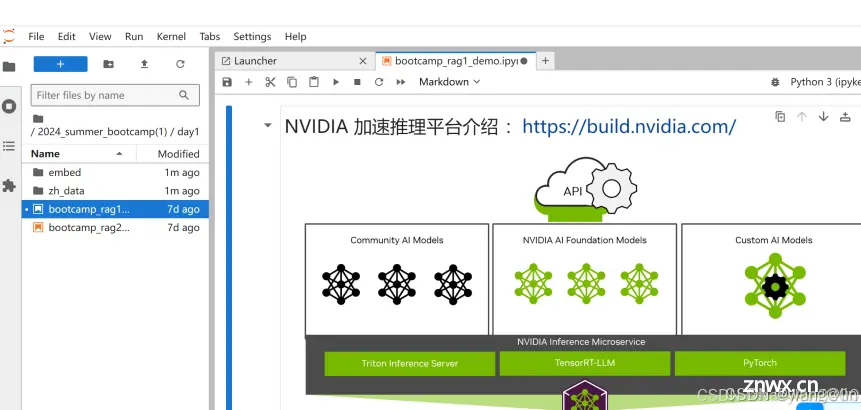

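Overall scope of the advanced hands-on part: it first introduces how multimodal models are called through NIM, then walks through inference with Phi-3-Vision over the NIM API, and finally shows how to build an interactive front end with the Gradio framework.

Application scenarios and highlights: a multimodal AI agent built on NIM. The project is small and focused, which makes it suitable for beginners, and it shows the process of building a multimodal AI agent concisely. It fits a range of scenarios, such as image and text interaction or generating images for PPT slides. The main highlights are its compact scope and low barrier to entry.

Technical approach and implementation steps

Model selection

The microsoft/phi-3-vision-128k-instruct model was chosen mainly because it is small and capable, runs fast, and is well suited to engineering applications.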

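Data construction (required):

1) Read the image with Image (PIL).

2) Pass the base64-encoded image to Microsoft Phi 3 Vision in the required format and use it to parse the data in the image. The Llama3 70b model is also called, with the prompt entered in Chinese.

3) Feed the prepared prompt to chart_reading, that is, the microsoft/phi-3-vision model, to analyze the data and obtain the table we need (the table variable).

4) Pass the table produced by Phi 3 Vision, together with the prompt, to another model, Llama 3.1, which modifies the data and generates code.

5) Run the generated Python code with the execution function (execute_and_return) and obtain the result.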
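Steps 1 and 2 come down to base64-encoding the image bytes and embedding them in the prompt as a data URI. The following is a minimal sketch using only the standard library; `build_chart_prompt` is a hypothetical helper name, and the bytes are a placeholder rather than a real chart image.

```python
import base64

def build_chart_prompt(image_bytes: bytes, mime: str = "image/png") -> str:
    """Embed raw image bytes in a text prompt as a base64 data URI."""
    image_b64 = base64.b64encode(image_bytes).decode("ascii")
    return (
        "Generate underlying data table of the figure below: "
        f'<img src="data:{mime};base64,{image_b64}" />'
    )

# Placeholder bytes standing in for the contents of a PNG file (assumption).
fake_png = b"\x89PNG\r\n\x1a\n" + b"\x00" * 8
prompt = build_chart_prompt(fake_png)
print(prompt[:90])
```

In the real notebook the bytes would come from reading the chart file in binary mode.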

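Feature integration (required for the advanced RAG track):

1) Define the prompt template chart_reading_prompt; the input image is converted to a base64 string and passed to it.

2) Feed the prepared prompt to chart_reading, that is, the microsoft/phi-3-vision model, to analyze the data and obtain the table we need (the table variable).

3) Pass the table produced by Phi 3 Vision, together with the prompt, to another model, Llama 3.1, which modifies the data and generates code.

4) Run the generated Python code with the execution function and obtain the result.

5) Wrap the multimodal agent in Gradio.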
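The control flow described here can be followed without calling any model. In this sketch `read_chart` and `instruct` are hypothetical stand-ins for the Phi-3 Vision and Llama 3.1 calls; they return canned strings, so only the branching and the TABLE / END_TABLE handling are illustrated.

```python
from typing import Optional

def read_chart(image_b64: str) -> str:
    # Stand-in for the vision model: returns a canned table.
    return "Country,Aid\nUK,10\nUS,20"

def instruct(table: str, user_input: str) -> str:
    # Stand-in for the instruct model: echoes the table inside the agreed markers.
    return f"TABLE\n{table}\nEND_TABLE"

def run_agent(image_b64: str, user_input: str, table: Optional[str]) -> str:
    # Read the chart only when no table has been extracted yet.
    if table is None:
        table = read_chart(image_b64)
    reply = instruct(table, user_input)
    # Pull the table text out from between the TABLE / END_TABLE markers.
    if "TABLE" in reply:
        table = reply.split("TABLE", 1)[1].split("END_TABLE")[0].strip()
    return table

result = run_agent("<base64 data>", "show this table in string", None)
print(result)
```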

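Implementation steps:

Environment setup (required): the development environment, including the software and libraries that need to be installed and configured.

1) Create the Python environment

First install Miniconda:

# If Anaconda is already installed, there is no need to install Miniconda.

In a terminal, run the following steps to configure the environment:

Create a Python 3.8 virtual environment

conda create --name ai_endpoint python=3.8

# Python 3.8 or later works.

Activate the virtual environment

conda activate ai_endpoint

Install the nvidia_ai_endpoint tools

# This library integrates LangChain with NIM.

pip install langchain-nvidia-ai-endpoints

Install Jupyter Lab

pip install jupyterlab

Install langchain_core

pip install langchain_core

Install langchain

pip install langchain

Install matplotlib

# Used for data visualization and chart plotting.

pip install matplotlib

Install NumPy

pip install numpy

# Install faiss; without a GPU, the CPU build can be used.

pip install faiss-cpu==1.7.2

Install the OpenAI library

pip install openai

# Two more packages are still missing: gradio and langchain-community.

pip install gradio -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

pip install langchain-community -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

2) Open the course notebooks in Jupyter Lab and run them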
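Before opening the notebooks it can help to confirm that every package is importable, since a missing gradio install was one of the problems hit in this project. A minimal sketch with the standard library; the list uses import names, which I am assuming match the pip packages installed for this project.

```python
import importlib.util

# Import names (not pip names) of the packages this project installs.
REQUIRED = [
    "langchain_nvidia_ai_endpoints",
    "langchain",
    "langchain_core",
    "langchain_community",
    "matplotlib",
    "numpy",
    "faiss",
    "openai",
    "gradio",
]

def missing_packages(names):
    """Return the subset of names that cannot be found in this environment."""
    return [n for n in names if importlib.util.find_spec(n) is None]

missing = missing_packages(REQUIRED)
print("Missing packages:", missing or "none")
```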

Code implementation (required):

1. RAG

Step 1 - Set the NVIDIA_API_KEY

import getpass
import os

if os.environ.get("NVIDIA_API_KEY", "").startswith("nvapi-"):
    print("Valid NVIDIA_API_KEY already in environment. Delete to reset")
else:
    nvapi_key = getpass.getpass("NVAPI Key (starts with nvapi-): ")
    assert nvapi_key.startswith("nvapi-"), f"{nvapi_key[:5]}... is not a valid key"
    os.environ["NVIDIA_API_KEY"] = nvapi_key

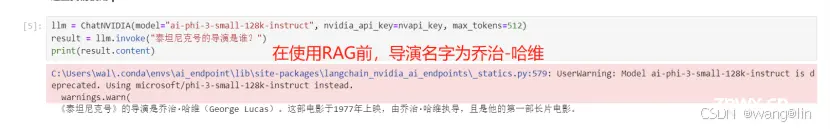

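Step 2 - Initialize the SLM

from langchain_nvidia_ai_endpoints import ChatNVIDIA

# Read the key from the environment so this also works when Step 1 found an existing key.
llm = ChatNVIDIA(model="ai-phi-3-small-128k-instruct", nvidia_api_key=os.environ["NVIDIA_API_KEY"], max_tokens=512)
# Question: "Who directed Titanic?"
result = llm.invoke("泰坦尼克号的导演是谁?")
print(result.content)

Step 3 - Initialize the ai-embed-qa-4 embedding model

from langchain_nvidia_ai_endpoints import NVIDIAEmbeddings

embedder = NVIDIAEmbeddings(model="ai-embed-qa-4")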

Step 4 - Load the text dataset

import os
from tqdm import tqdm
from pathlib import Path

# Here we read in the text data and prepare them into vectorstore
ps = os.listdir("./zh_data/")
data = []
sources = []
for p in ps:
    if p.endswith('.txt'):
        path2file = "./zh_data/" + p
        with open(path2file, encoding="utf-8") as f:
            lines = f.readlines()
            for line in lines:
                if len(line) >= 1:
                    data.append(line)
                    sources.append(path2file)

Step 5 - Do some basic cleaning and remove empty lines

# Filter data and sources together so that sources[i] still matches documents[i] in Step 6a.
kept = [(d, s) for d, s in zip(data, sources) if d != '\n']
documents = [d for d, _ in kept]
sources = [s for _, s in kept]
len(data), len(documents), data[0]

Step 6a - Process the documents into a FAISS vector store and save it to disk

# Here we create a vector store from the documents and save it to disk.
from operator import itemgetter
from langchain_community.vectorstores import FAISS
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain.text_splitter import CharacterTextSplitter
from langchain_nvidia_ai_endpoints import ChatNVIDIA
import faiss

# This only needs to run once; afterwards the saved vector store can be reloaded.
text_splitter = CharacterTextSplitter(chunk_size=400, separator=" ")
docs = []
metadatas = []

for i, d in enumerate(documents):
    splits = text_splitter.split_text(d)
    #print(len(splits))
    docs.extend(splits)
    metadatas.extend([{"source": sources[i]}] * len(splits))

store = FAISS.from_texts(docs, embedder, metadatas=metadatas)
store.save_local('./zh_data/nv_embedding')
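To see what splitting on a space separator means for this dataset, here is a simplified stand-in for the splitter, written from scratch and not LangChain's actual implementation. It packs space-delimited pieces into chunks of at most `chunk_size` characters. The point worth noticing is that text without spaces, which is typical for Chinese, cannot be broken up this way, so such a line would likely stay in one chunk regardless of `chunk_size`.

```python
def split_on_separator(text, chunk_size=400, separator=" "):
    """Greedily pack separator-delimited pieces into chunks of at most
    chunk_size characters. A piece longer than chunk_size is kept whole."""
    chunks, current = [], ""
    for piece in text.split(separator):
        if not piece:
            continue
        candidate = piece if not current else current + separator + piece
        if len(candidate) <= chunk_size or not current:
            current = candidate
        else:
            chunks.append(current)
            current = piece
    if current:
        chunks.append(current)
    return chunks

english = "the quick brown fox jumps over the lazy dog"
chinese = "泰坦尼克号是一部由詹姆斯卡梅隆执导的电影"

print(split_on_separator(english, chunk_size=15))
print(split_on_separator(chinese, chunk_size=5))
```

If finer chunks are needed for Chinese text, a separator that actually occurs in the data (for example a Chinese full stop) may be a better fit; that is a suggestion, not something the course notebook does.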

Step 6b - Reload the FAISS vector store that was processed and saved earlier

# Load the vector store back.
store = FAISS.load_local("./zh_data/nv_embedding", embedder, allow_dangerous_deserialization=True)

Step 7 - Ask a question and run RAG retrieval with the phi-3-small-128k-instruct model

retriever = store.as_retriever()

prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "Answer solely based on the following context:\n<Documents>\n{context}\n</Documents>",
        ),
        ("user", "{question}"),
    ]
)

chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

# Question: "Who directed Titanic?"
chain.invoke("泰坦尼克号的导演是谁?")
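What the chain does can be illustrated offline: retrieve the most similar document, then place it into the system prompt as context. The sketch below is a toy version under loud assumptions: `embed` is a character-count stand-in for the real embedding model, and `retrieve` and `build_prompt` are hypothetical helpers, not part of LangChain or FAISS.

```python
from collections import Counter
from math import sqrt

def embed(text):
    # Toy embedding: character counts. A real system would call an embedding model.
    return Counter(text)

def cosine(a, b):
    dot = sum(a[k] * b[k] for k in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, docs, k=1):
    # Rank documents by similarity to the question and keep the top k.
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(question, docs):
    context = "\n".join(retrieve(question, docs))
    return f"Answer solely based on the following context:\n\n{context}\n\nQuestion: {question}"

docs = [
    "泰坦尼克号的导演是詹姆斯·卡梅隆。",
    "FAISS is a library for vector similarity search.",
]
rag_prompt = build_prompt("泰坦尼克号的导演是谁?", docs)
print(rag_prompt)
```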

2. AI agent

Step 1: import the packages

from langchain_nvidia_ai_endpoints import ChatNVIDIA
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain.schema.runnable import RunnableLambda
from langchain.schema.runnable.passthrough import RunnableAssign
from langchain_core.runnables import RunnableBranch
from langchain_core.runnables import RunnablePassthrough
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

import os
import base64
import matplotlib.pyplot as plt
import numpy as np

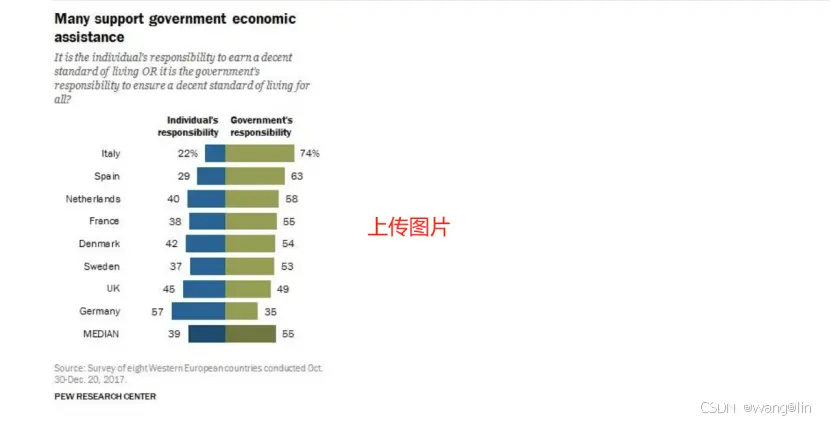

Step 2: parse image data with Microsoft Phi 3 Vision

def image2b64(image_file):
    with open(image_file, "rb") as f:
        image_b64 = base64.b64encode(f.read()).decode()
    return image_b64

image_b64 = image2b64("economic-assistance-chart.png")
# image_b64 = image2b64("eco-good-bad-chart.png")

from PIL import Image
display(Image.open("economic-assistance-chart.png"))

instruct_chat = ChatNVIDIA(model="meta/llama3-70b-instruct")
# result = instruct_chat.invoke('How to implement Fibonacci in python using dynamic programming')
# Prompt: "How to implement quicksort in Python"
result = instruct_chat.invoke('怎么用 Python 实现快速排序')
print(result.content)

Step 3: build the multimodal agent with LangChain

import re

# Save the table produced while the LangChain pipeline runs into a global variable
def save_table_to_global(x):
    global table
    if 'TABLE' in x.content:
        table = x.content.split('TABLE', 1)[1].split('END_TABLE')[0]
    return x
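One detail of the substring check is that 'TABLE' is also contained in 'END_TABLE', so a reply that mentions only the closing marker would still pass the test and yield a bogus table. A slightly stricter variant can be sketched with a regular expression; `extract_table` is a hypothetical helper, not part of the course code.

```python
import re

# Opening marker must not be the tail of END_TABLE or another upper-case word.
TABLE_RE = re.compile(r"(?<![A-Z_])TABLE\s*(.*?)\s*END_TABLE", re.DOTALL)

def extract_table(text):
    """Return the text between TABLE and END_TABLE, or None if absent."""
    match = TABLE_RE.search(text)
    return match.group(1) if match else None

reply = "Here you go.\nTABLE\n| Country | Aid |\n| UK | 10 |\nEND_TABLE\nDone."
print(extract_table(reply))
```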

# Helper function for debugging
def print_and_return(x):
    print(x)
    return x

# Post-process the code generated by the model: drop comments and explanatory text outside the fenced block, keeping only the Python code
def extract_python_code(text):
    pattern = r'```python\s*(.*?)\s*```'
    matches = re.findall(pattern, text, re.DOTALL)
    return [match.strip() for match in matches]
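The extraction step can be checked on a sample reply. This sketch assumes the pattern is meant to capture the body of a python-fenced block, which is what the instruct prompt asks the model to produce; the fence string is built at runtime only to keep the listing readable.

```python
import re

FENCE = "`" * 3  # three backticks

def extract_python_code(text):
    """Return the bodies of all python-fenced blocks found in text."""
    pattern = FENCE + r"python\s*(.*?)\s*" + FENCE
    return [m.strip() for m in re.findall(pattern, text, re.DOTALL)]

reply = (
    "Sure, here is the code:\n"
    + FENCE + "python\nprint('hello')\n" + FENCE
    + "\nLet me know if you need changes."
)
blocks = extract_python_code(reply)
print(blocks)
```

Because the function returns an empty list when no block is present, callers that index the first element should first check that a python fence exists, as the branch condition in the agent does.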

# Execute the code generated by the model
def execute_and_return(x):
    code = extract_python_code(x.content)[0]
    try:
        exec(str(code))
    except Exception as e:
        print(f"The code is not executable ({e}), don't give up, try again!")
    return x
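Calling exec on model output runs it in the calling scope with no isolation. A slightly more defensive variant, sketched here under the assumption that the generated code only needs its own variables, runs it in a fresh namespace and returns the traceback instead of printing a fixed message. `run_generated_code` is a hypothetical helper, and this is not a security sandbox.

```python
import traceback

def run_generated_code(code: str):
    """Execute code in a fresh namespace; return (namespace, error_text)."""
    namespace = {}
    try:
        exec(code, namespace)
        return namespace, None
    except Exception:
        # Keep the traceback so it can be shown to the user or fed back to the model.
        return namespace, traceback.format_exc()

ns, err = run_generated_code("total = sum([1, 2, 3])")
print(ns["total"], err)

ns, err = run_generated_code("1 / 0")
print(err.splitlines()[-1])
```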

# Encode the image as base64 so that it can be passed to the model
def image2b64(image_file):
    with open(image_file, "rb") as f:
        image_b64 = base64.b64encode(f.read()).decode()
    return image_b64

def chart_agent(image_b64, user_input, table):
    # Chart reading Runnable
    chart_reading = ChatNVIDIA(model="microsoft/phi-3-vision-128k-instruct")
    chart_reading_prompt = ChatPromptTemplate.from_template(
        'Generate underlying data table of the figure below, : <img src="data:image/png;base64,{image_b64}" />'
    )
    chart_chain = chart_reading_prompt | chart_reading

    # Instruct LLM Runnable
    # instruct_chat = ChatNVIDIA(model="nv-mistralai/mistral-nemo-12b-instruct")
    # instruct_chat = ChatNVIDIA(model="meta/llama-3.1-8b-instruct")
    # instruct_chat = ChatNVIDIA(model="ai-llama3-70b")
    instruct_chat = ChatNVIDIA(model="meta/llama-3.1-405b-instruct")

    instruct_prompt = ChatPromptTemplate.from_template(
        "Do NOT repeat my requirements already stated. Based on this table {table}, {input}" \
        "If has table string, start with 'TABLE', end with 'END_TABLE'." \
        "If has code, start with '```python' and end with '```'." \
        "Do NOT include table inside code, and vice versa."
    )
    instruct_chain = instruct_prompt | instruct_chat

    # Read the chart only when no table has been extracted yet
    chart_reading_branch = RunnableBranch(
        (lambda x: x.get('table') is None, RunnableAssign({'table': chart_chain })),
        (lambda x: x.get('table') is not None, lambda x: x),
        lambda x: x
    )

    # Update the table when the reply contains one
    update_table = RunnableBranch(
        (lambda x: 'TABLE' in x.content, save_table_to_global),
        lambda x: x
    )

    # Execute the chart-drawing code
    execute_code = RunnableBranch(
        (lambda x: '```python' in x.content, execute_and_return),
        lambda x: x
    )

    chain = (
        chart_reading_branch
        #| RunnableLambda(print_and_return)
        | instruct_chain
        #| RunnableLambda(print_and_return)
        | update_table
        | execute_code
    )

    return chain.invoke({"image_b64": image_b64, "input": user_input, "table": table}).content
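The three RunnableBranch objects share one idea: try (condition, handler) pairs in order, run the first handler whose condition holds, and otherwise fall back to the default. The plain-Python sketch below mimics that behaviour for illustration; `make_branch` is a hypothetical helper, not the LangChain class, and the placeholder table string stands in for a real model call.

```python
def make_branch(*pairs_and_default):
    """Build a function that applies the first handler whose condition is true,
    falling back to the last argument as the default handler."""
    *pairs, default = pairs_and_default

    def run(x):
        for condition, handler in pairs:
            if condition(x):
                return handler(x)
        return default(x)

    return run

# Fill in 'table' only when it is missing, mirroring chart_reading_branch.
fill_table = make_branch(
    (lambda x: x.get("table") is None, lambda x: {**x, "table": "<table from chart>"}),
    lambda x: x,
)

print(fill_table({"input": "draw it", "table": None}))
print(fill_table({"input": "draw it", "table": "A,B"}))
```

Seen this way, the second pair in chart_reading_branch looks redundant, since the default already passes the input through unchanged.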

# Use the global variable table to store the data
table = None

# Convert the image to be processed to base64
image_b64 = image2b64("economic-assistance-chart.png")

# Show the image that was read
from PIL import Image
display(Image.open("economic-assistance-chart.png"))

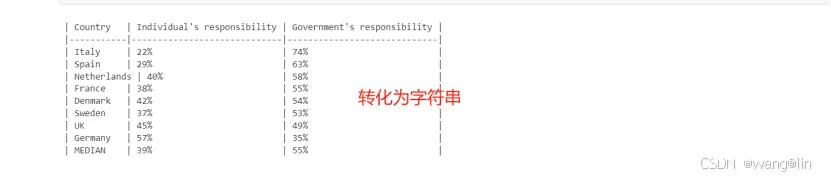

user_input = "show this table in string"
chart_agent(image_b64, user_input, table)
print(table)    # let's see what 'table' looks like now

user_input = "replace table string's 'UK' with 'United Kingdom'"
chart_agent(image_b64, user_input, table)
print(table)    # let's see what 'table' looks like now

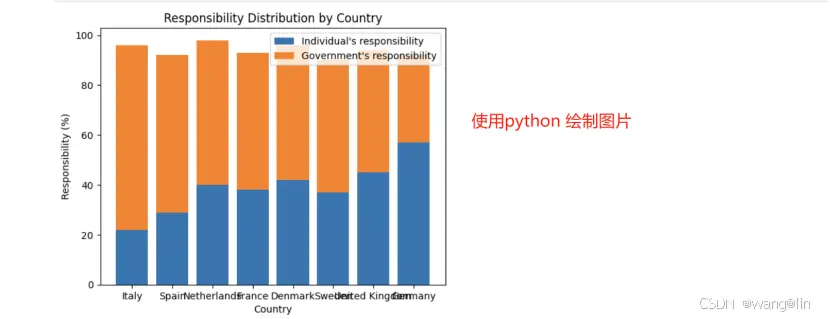

user_input = "draw this table as stacked bar chart in python"
result = chart_agent(image_b64, user_input, table)
print("result: "+result)    # let's see what the agent returned

Step 4: wrap the multimodal agent in Gradio

# Output path for the generated chart; change it to a directory that exists on your machine.
# The raw string keeps the backslashes literal.
img_path = os.path.join(r'C:\Users\wal\Desktop\NVIDIA rag', 'image.png')
print(img_path)

def execute_and_return_gr(x):
    code = extract_python_code(x.content)[0]
    try:
        exec(str(code))
    except Exception as e:
        print(f"The code is not executable ({e}), don't give up, try again!")
    return img_path

# table defaults to None because the Gradio interface only supplies the image and the text input
def chart_agent_gr(image_b64, user_input, table=None):
    # Gradio passes a file path here, so encode the file first
    image_b64 = image2b64(image_b64)

    # Chart reading Runnable
    chart_reading = ChatNVIDIA(model="microsoft/phi-3-vision-128k-instruct")
    chart_reading_prompt = ChatPromptTemplate.from_template(
        'Generate underlying data table of the figure below, : <img src="data:image/png;base64,{image_b64}" />'
    )
    chart_chain = chart_reading_prompt | chart_reading

    # Instruct LLM Runnable
    # instruct_chat = ChatNVIDIA(model="nv-mistralai/mistral-nemo-12b-instruct")
    # instruct_chat = ChatNVIDIA(model="meta/llama-3.1-8b-instruct")
    # instruct_chat = ChatNVIDIA(model="ai-llama3-70b")
    instruct_chat = ChatNVIDIA(model="meta/llama-3.1-405b-instruct")

    instruct_prompt = ChatPromptTemplate.from_template(
        "Do NOT repeat my requirements already stated. Based on this table {table}, {input}" \
        "If has table string, start with 'TABLE', end with 'END_TABLE'." \
        "If has code, start with '```python' and end with '```'." \
        "Do NOT include table inside code, and vice versa."
    )
    instruct_chain = instruct_prompt | instruct_chat

    # Read the chart only when no table has been extracted yet
    chart_reading_branch = RunnableBranch(
        (lambda x: x.get('table') is None, RunnableAssign({'table': chart_chain })),
        (lambda x: x.get('table') is not None, lambda x: x),
        lambda x: x
    )

    # Update the table when the reply contains one
    update_table = RunnableBranch(
        (lambda x: 'TABLE' in x.content, save_table_to_global),
        lambda x: x
    )

    # Execute the chart-drawing code
    execute_code = RunnableBranch(
        (lambda x: '```python' in x.content, execute_and_return_gr),
        lambda x: x
    )

    chain = (
        chart_reading_branch
        | RunnableLambda(print_and_return)
        | instruct_chain
        | RunnableLambda(print_and_return)
        | update_table
        | execute_code
    )

    return chain.invoke({"image_b64": image_b64, "input": user_input, "table": table})

user_input = "replace table string's 'UK' with 'United Kingdom', draw this table as stacked bar chart in python, and save the image in path: "+img_path
print(user_input)

import gradio as gr

multi_modal_chart_agent = gr.Interface(fn=chart_agent_gr,
                    inputs=[gr.Image(label="Upload image", type="filepath"), 'text'],
                    outputs=['image'],
                    title="Multi Modal chat agent",
                    description="Multi Modal chat agent",
                    allow_flagging="never")

multi_modal_chart_agent.launch(debug=True, share=False, show_api=False, server_port=5000, server_name="0.0.0.0")
# Alternative port; run only one of the two launch calls.
# multi_modal_chart_agent.launch(debug=True, share=False, show_api=False, server_port=8888, server_name="0.0.0.0")

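Project results and demo:

Application scenarios: describe the chatbot's concrete application scenarios, such as customer service or tutoring.

Feature demo: list and demonstrate the main features implemented, with UI screenshots that show the results.

1) Local RAG question answering

2) Multimodal question answering over images and text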

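Problems and solutions:

Problem analysis:

1) gradio was not installed

2) The path did not exist

Fixes:

1) pip install gradio -i https://pypi.tuna.tsinghua.edu.cn/simple

2) Windows and Linux write paths differently; correct the path for the system actually in use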
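The path problem is easy to reproduce: in a normal Python string a Windows path containing `\U` is read as an escape sequence, and a trailing backslash swallows the closing quote. A minimal sketch of a safer way to build the path with pathlib follows; the Linux directory is only an example name, not one taken from the project.

```python
from pathlib import PurePosixPath, PureWindowsPath

# A raw string keeps backslashes literal, so '\U' is not read as an escape.
win_dir = PureWindowsPath(r"C:\Users\wal\Desktop\NVIDIA rag")
win_img = win_dir / "image.png"

# The same idea on Linux; this directory name is only an example.
posix_img = PurePosixPath("/home/wal/nvidia_rag") / "image.png"

print(win_img)
print(posix_img)
```

In real code, `pathlib.Path` picks the right flavour for the operating system it runs on, so the same join works on both.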

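Project summary and outlook:

Project evaluation: shortcomings: because time was tight, TTS and ASR were not added to the project.

Future directions: engineer an AI agent of my own that adds TTS, ASR, text, and image capabilities, and apply it to real scenarios.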
