LangChain-10(2) Bonus: Writing an Agent to Check Local Docker Status (Not Much Technical Depth, Just the Idea)

CSDN 2024-09-15 08:07:01

Reading the previous section first will give you a better understanding of this one.

Install Dependencies

```shell
pip install langchainhub
```

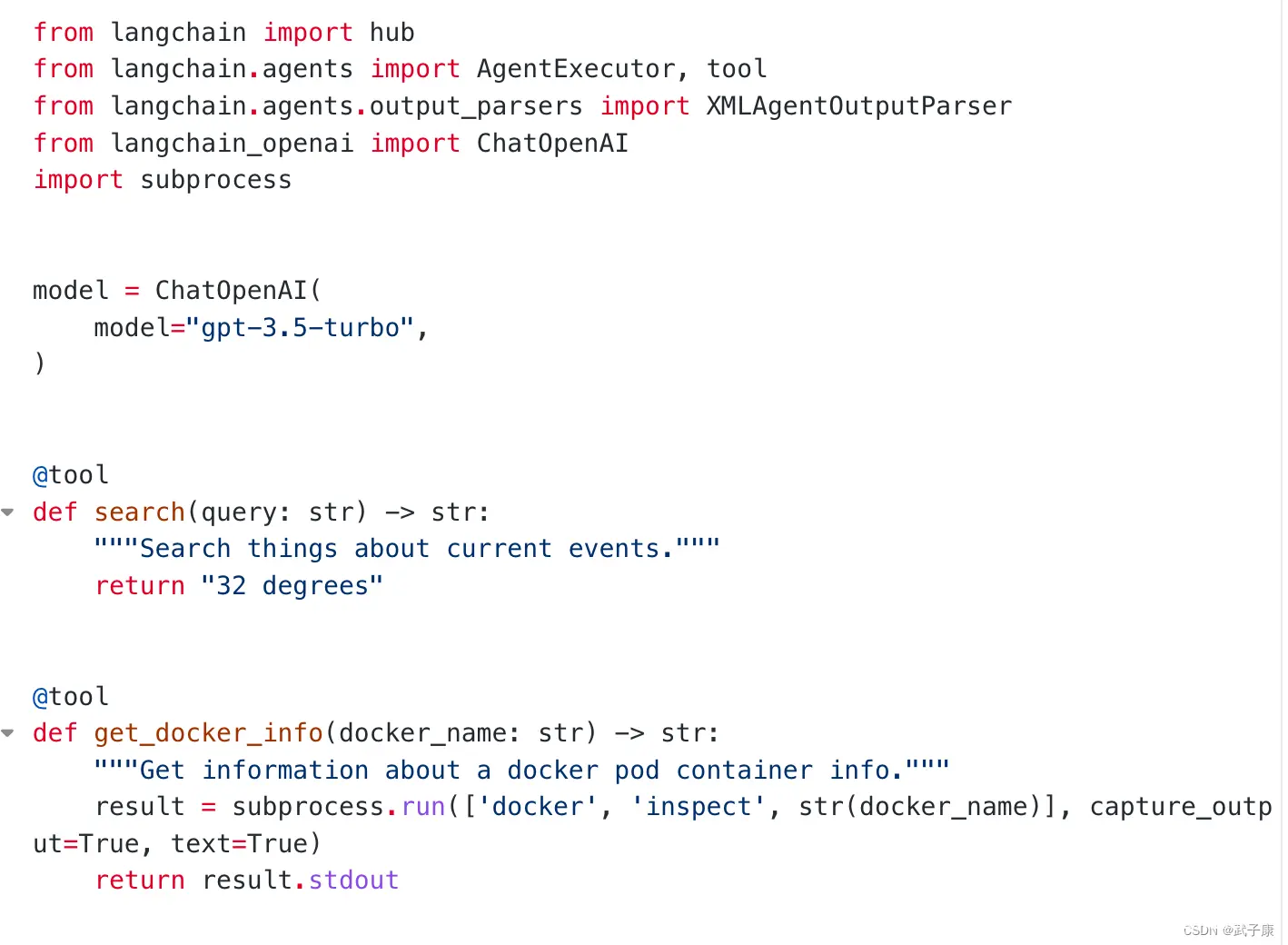

Write the Code

Core Code

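```python
import subprocess

from langchain.agents import tool


@tool
def get_docker_info(docker_name: str) -> str:
    """Get information about a Docker container by its name."""
    # Run `docker inspect <name>` and return its raw JSON output as text
    result = subprocess.run(["docker", "inspect", docker_name], capture_output=True, text=True)
    return result.stdout
```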

Here the container status is obtained by running a shell command.
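As written, `get_docker_info` returns only `result.stdout`, so when `docker` is not installed, the daemon is not running, or the container name is wrong, the model receives little or nothing that explains the failure. A minimal hardened sketch, assuming you want such failures passed back to the model as readable text; `run_cli` is a hypothetical helper name and the 10-second timeout is an arbitrary choice:

```python
import subprocess


def run_cli(args: list[str], timeout: float = 10.0) -> str:
    """Run a command; return stdout on success, otherwise an error string the model can read."""
    try:
        result = subprocess.run(args, capture_output=True, text=True, timeout=timeout)
    except FileNotFoundError:
        # The executable (e.g. docker) is not installed or not on PATH
        return f"error: command not found: {args[0]}"
    except subprocess.TimeoutExpired:
        return f"error: command timed out after {timeout}s"
    if result.returncode != 0:
        # Docker reports problems such as an unknown container name on stderr
        return f"error (exit {result.returncode}): {result.stderr.strip()}"
    return result.stdout


# Plain-function version of the tool so this sketch runs without LangChain;
# put @tool back on it when registering it with the agent.
def get_docker_info(docker_name: str) -> str:
    """Get information about a Docker container by its name."""
    return run_cli(["docker", "inspect", docker_name])
```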

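Running the Docker command produces a long block of text. We hand that text to the LLM, which extracts the relevant information, reasons over it, and finally answers our question.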
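`docker inspect` prints a JSON array with one object per container, and for a real container it typically runs to hundreds of lines, all of which end up in the prompt. If you would rather hand the model less text, one option is to parse the JSON and keep only a few fields. A sketch under that assumption: `summarize_inspect` is a hypothetical helper, the field choice is illustrative, and `sample` is a heavily trimmed, made-up example rather than real output. Note that the top-level `Image` field holds the image ID (`sha256:...`), while `Config.Image` holds the image name the container was created from, which is the kind of value the model reports for `message2` in the run output.

```python
import json


def summarize_inspect(raw: str) -> list[dict]:
    """Reduce `docker inspect` JSON output to a few fields per container."""
    containers = json.loads(raw)  # docker inspect prints a JSON array
    summary = []
    for c in containers:
        summary.append(
            {
                # Docker prefixes container names with "/"
                "name": c.get("Name", "").lstrip("/"),
                # Image name the container was created from, not the sha256 ID
                "image": c.get("Config", {}).get("Image"),
                # e.g. "running" or "exited"
                "status": c.get("State", {}).get("Status"),
            }
        )
    return summary


# Hypothetical, heavily trimmed sample; real output has many more fields
sample = """[{"Name": "/lobe-chat-wzk",
  "Image": "sha256:0123abcd",
  "Config": {"Image": "lobehub/lobe-chat"},
  "State": {"Status": "running", "Running": true}}]"""

print(json.dumps(summarize_inspect(sample)))
# [{"name": "lobe-chat-wzk", "image": "lobehub/lobe-chat", "status": "running"}]
```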

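Note the `@tool` decorator: without it, the function cannot be used as a tool. Also be sure to write a `"""..."""` docstring that describes what the tool does, because the model decides whether to call a tool based on that description. If gpt-3.5-turbo does not give good results, try GPT-4.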
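Why the docstring matters can be illustrated with a toy stand-in for `@tool`. This is not LangChain's implementation, and `ToyTool` and `toy_tool` are hypothetical names; it only mimics the idea that the decorator records the function name and docstring, which `convert_tools` in the full code then renders as the `name: description` lines the model chooses from. This toy simply rejects a function that has no docstring.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyTool:
    """Hypothetical stand-in for a LangChain tool: name, description, and the wrapped function."""
    name: str
    description: str
    func: Callable[[str], str]

    def run(self, tool_input: str) -> str:
        return self.func(tool_input)


def toy_tool(func: Callable[[str], str]) -> ToyTool:
    """Toy decorator: function name becomes the tool name, docstring becomes its description."""
    doc = (func.__doc__ or "").strip()
    if not doc:
        # Without a description the model has nothing to base its choice on
        raise ValueError(f"{func.__name__} needs a docstring describing the tool")
    return ToyTool(name=func.__name__, description=doc, func=func)


@toy_tool
def search(query: str) -> str:
    """Search things about current events."""
    return "32 degrees"


def convert_tools(tools: list[ToyTool]) -> str:
    # Same "name: description" formatting as convert_tools in the article's code
    return "\n".join(f"{t.name}: {t.description}" for t in tools)


print(convert_tools([search]))  # search: Search things about current events.
```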

```python
import subprocess

from langchain import hub
from langchain.agents import AgentExecutor, tool
from langchain.agents.output_parsers import XMLAgentOutputParser
from langchain_openai import ChatOpenAI

# Reads OPENAI_API_KEY from the environment
model = ChatOpenAI(
    model="gpt-3.5-turbo",
)


@tool
def search(query: str) -> str:
    """Search things about current events."""
    # Stub: always returns the same answer
    return "32 degrees"


@tool
def get_docker_info(docker_name: str) -> str:
    """Get information about a Docker container by its name."""
    # Run `docker inspect <name>` and hand the raw JSON text to the model
    result = subprocess.run(["docker", "inspect", docker_name], capture_output=True, text=True)
    return result.stdout


tool_list = [search, get_docker_info]

# Get the prompt to use - you can modify this!
prompt = hub.pull("hwchase17/xml-agent-convo")


# Logic for going from intermediate steps to a string to pass into model
# This is pretty tied to the prompt
def convert_intermediate_steps(intermediate_steps):
    log = ""
    for action, observation in intermediate_steps:
        log += (
            f"<tool>{action.tool}</tool><tool_input>{action.tool_input}"
            f"</tool_input><observation>{observation}</observation>"
        )
    return log


# Logic for converting tools to string to go in prompt
def convert_tools(tools):
    return "\n".join([f"{tool.name}: {tool.description}" for tool in tools])


agent = (
    {
        "input": lambda x: x["input"],
        "agent_scratchpad": lambda x: convert_intermediate_steps(
            x["intermediate_steps"]
        ),
    }
    | prompt.partial(tools=convert_tools(tool_list))
    # Stop generating once a tool call or the final answer is complete
    | model.bind(stop=["</tool_input>", "</final_answer>"])
    | XMLAgentOutputParser()
)

agent_executor = AgentExecutor(agent=agent, tools=tool_list)

message1 = agent_executor.invoke({"input": "whats the weather in New york?"})
print(f"message1: {message1}")

message2 = agent_executor.invoke({"input": "what is docker pod which name 'lobe-chat-wzk' info? I want to know it 'Image' url"})
print(f"message2: {message2}")
```

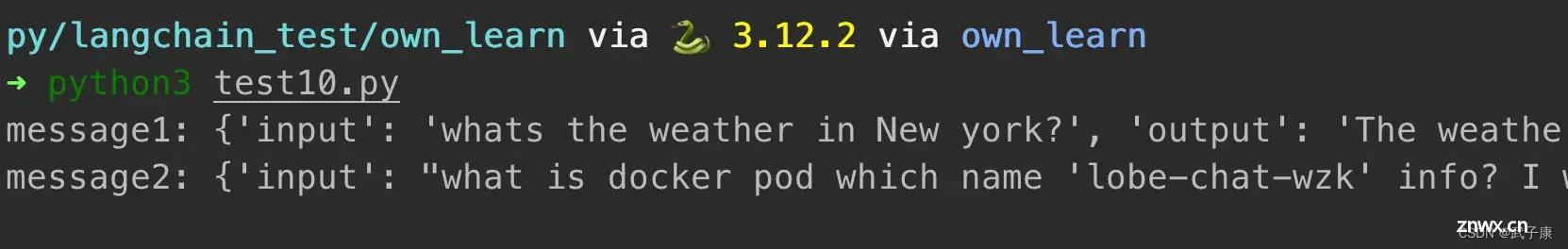
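To make the flow concrete without an API key, here is a toy version of what the pipeline and `AgentExecutor` do together. It is a simplified sketch, not LangChain's code: `Action`, `Finish`, `parse_xml_output`, `run_agent`, and the scripted `fake_model` are hypothetical names. The model replies in the XML format the prompt asks for. Because `</tool_input>` is in the `stop` list, generation halts right before that closing tag (so the model cannot go on to invent its own `<observation>`), which means the parser has to tolerate the tag being absent. Each tool result is then replayed into the next prompt through the scratchpad, which is how the model gets to "see" the `docker inspect` output.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Action:
    """Hypothetical stand-in for an agent action: which tool to call, with what input."""
    tool: str
    tool_input: str


@dataclass
class Finish:
    """Hypothetical stand-in for the agent's final answer."""
    output: str


def parse_xml_output(text: str) -> Union[Action, Finish]:
    """Toy parser for the <tool>/<tool_input>/<final_answer> format."""
    if "</tool>" in text:
        tool_part, input_part = text.split("</tool>", 1)
        tool = tool_part.split("<tool>", 1)[1]
        # The stop sequence cuts generation before "</tool_input>", so it is usually missing
        tool_input = input_part.split("<tool_input>", 1)[1].split("</tool_input>", 1)[0]
        return Action(tool.strip(), tool_input.strip())
    if "<final_answer>" in text:
        answer = text.split("<final_answer>", 1)[1].split("</final_answer>", 1)[0]
        return Finish(answer.strip())
    raise ValueError(f"could not parse model output: {text!r}")


def convert_intermediate_steps(steps: list[tuple[Action, str]]) -> str:
    # Same format as the article's helper: replay each tool call and its observation
    return "".join(
        f"<tool>{a.tool}</tool><tool_input>{a.tool_input}"
        f"</tool_input><observation>{obs}</observation>"
        for a, obs in steps
    )


def run_agent(model: Callable[[str], str], tools: dict[str, Callable[[str], str]],
              question: str, max_steps: int = 5) -> str:
    """Toy executor loop: ask the model, run the requested tool, feed the observation back."""
    steps: list[tuple[Action, str]] = []
    for _ in range(max_steps):
        result = parse_xml_output(model(question + "\n" + convert_intermediate_steps(steps)))
        if isinstance(result, Finish):
            return result.output
        steps.append((result, tools[result.tool](result.tool_input)))
    raise RuntimeError("agent did not finish within max_steps")


def fake_model(prompt: str) -> str:
    """Scripted fake model: calls search once, then answers from the observation."""
    if "<observation>" not in prompt:
        return "<tool>search</tool><tool_input>weather in New York"
    observation = prompt.split("<observation>")[1].split("</observation>")[0]
    return f"<final_answer>The weather in New York is {observation}"


print(run_agent(fake_model, {"search": lambda q: "32 degrees"}, "whats the weather in New york?"))
# The weather in New York is 32 degrees
```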

Run the Code

```text
➜ python3 test10.py
message1: {'input': 'whats the weather in New york?', 'output': 'The weather in New York is 32 degrees'}
message2: {'input': "what is docker pod which name 'lobe-chat-wzk' info? I want to know it 'Image' url", 'output': 'The Image URL for the docker pod named \'lobe-chat-wzk\' is "lobehub/lobe-chat"'}
```
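The model's answer for `message2` can be cross-checked without the LLM: `docker inspect` accepts a Go template through `--format`, and `{{.Config.Image}}` selects the image name directly. A small sketch; `image_of` is a hypothetical helper name, and on the author's machine it would presumably print the same `lobehub/lobe-chat` value the model reported.

```python
import subprocess
from typing import Optional


def image_of(container: str) -> Optional[str]:
    """Ask Docker directly for the image a container was created from (no LLM involved)."""
    try:
        result = subprocess.run(
            ["docker", "inspect", "--format", "{{.Config.Image}}", container],
            capture_output=True,
            text=True,
            timeout=10,
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):
        return None  # docker is missing or not responding
    if result.returncode != 0:
        return None  # e.g. no container with that name
    return result.stdout.strip()


if __name__ == "__main__":
    print(image_of("lobe-chat-wzk"))
```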
