Building a WebRTC voice live room with uniapp for app (Android + iOS) and H5, with notes on every pitfall I hit

叫我刘某人 · 2024-06-11 10:03:03

I. Demo

II. Main features

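1. Create your own voice live room

2. List all live rooms

3. Join a room

4. Request a mic seat

5. Microphone control

6. Sound control

7. Send gifts (effects + batch movement animation)

8. Leave the room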

III. How it works

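1. uniapp builds the clients: H5, Android and iOS

2. WebRTC powers the voice room (plenty of articles online explain the underlying principles, so I won't go into them here)

3. A signaling server built with Node.js (I used WebSocket)

4. Gifts and their effects are rendered with SVGA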
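Every client message in the code below is a JSON envelope of the form `{ type, userId, rid, msg, formUserId }`, sent over the socket. Here `formUserId` is the peer the message is meant for. The following is a minimal sketch of a builder and parser for that envelope. It assumes the field names and type list seen in this post's client code make up the whole client-to-server protocol. `makeSignal` and `parseSignal` are hypothetical helper names.

```javascript
// Hypothetical helpers for the JSON envelope the client sends to the signaling server.
// Field names (type, userId, rid, msg, formUserId) mirror the client code in this post;
// the type list is only what appears in the post, the real server may accept more.
const SIGNAL_TYPES = new Set(['newConnect', 'homeList', 'join', 'offer', 'answer', 'candidate', 'leave'])

function makeSignal(type, { userId, rid, msg, formUserId } = {}) {
  if (!SIGNAL_TYPES.has(type)) {
    throw new Error(`unknown signal type: ${type}`)
  }
  // formUserId names the peer this message is addressed to (offer/answer/candidate)
  return JSON.stringify({ type, userId, rid, msg, formUserId })
}

function parseSignal(raw) {
  const data = JSON.parse(raw)
  if (typeof data.type !== 'string') {
    throw new Error('signal without a type field')
  }
  return data
}

// usage
const wire = makeSignal('offer', { userId: 'u1', rid: 'r1', msg: { type: 'offer', sdp: '...' }, formUserId: 'u2' })
const back = parseSignal(wire)
console.log(back.type, back.formUserId) // prints: offer u2
```

Validating `type` at the sending side catches typos early. This matters because the code relies on several misspelled identifiers, such as `sendOffier` and `RTCOffier`, that would otherwise fail silently.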

IV. Pitfalls and solutions

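1. On the client (this mainly concerns the app), you must create WebRTC in the view layer!!! Do NOT create it in the logic layer!!! Creating it there demands a secure connection, which means an SSL certificate. Many people don't have one; if you do, ignore this point. So how do you create RTC in the view layer? In uniapp, use renderjs: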

```html
<script module="webRTC" lang="renderjs">
// view layer (renderjs): the WebView provides RTCPeerConnection
new RTCPeerConnection(iceServers)
</script>
```
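A quick way to confirm which JS context you are in is to feature-detect the WebRTC globals before creating a peer. The sketch below assumes, as this pitfall implies, that these APIs are only usable inside the WebView that runs renderjs. `canUseWebRTC` is a hypothetical name. The secure-context check reflects the standard rule that `navigator.mediaDevices` is only exposed in secure contexts (HTTPS, localhost and similar).

```javascript
// Hypothetical check: can this JS context run WebRTC?
// Pass the global object of the context to test (window in renderjs).
function canUseWebRTC(scope) {
  const hasPeer = typeof scope.RTCPeerConnection === 'function'
  const hasMedia = !!(scope.navigator &&
    scope.navigator.mediaDevices &&
    typeof scope.navigator.mediaDevices.getUserMedia === 'function')
  // isSecureContext is false on plain-http pages; treat "not reported" as secure
  const secure = scope.isSecureContext !== false
  return { hasPeer, hasMedia, secure, ok: hasPeer && hasMedia && secure }
}

// usage, e.g. in a renderjs method before new RTCPeerConnection(...):
console.log(canUseWebRTC(globalThis).ok)
```

Logging the three flags separately helps with diagnosis. A missing `RTCPeerConnection` points to the wrong layer, while a missing `mediaDevices` usually points to an insecure context.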

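2. (Again, this mainly concerns the app.) Suppose the client socket that talks to the signaling server is created in the view layer. On the app, it then loses its state after a page navigation and no longer responds to signaling messages. The solution: never create the socket in the client's view layer!!! In other words, do not create it inside renderjs. Create it in the logic layer with the API uniapp provides, then talk to renderjs through the logic-layer/view-layer communication mechanism described in the uniapp docs. This makes development somewhat more tedious, but it solves the problem.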

```js
onShow() {
  // socketTask wraps the socket created in the logic layer with uni.connectSocket;
  // its watchSocketMessage stands in for the socket instance's onMessage
  socketTask.watchSocketMessage = (data) => {
    this.watchSocketMessage(data)
  }
},
methods: {
  watchSocketMessage(data) {
    // handle messages from the signaling server here
  },
},
```

This is how the logic layer talks to renderjs. State is bound on a view, and whenever a bound value changes, the matching renderjs method is triggered. Note that these methods are also triggered once right after the page finishes loading, so each of them must check its argument and return early when there is nothing to do.

```html
<view
  :rid="rid" :change:rid="webRTC.initRid"
  :userId="userId" :change:userId="webRTC.initUserId"
  :giftnum="giftnum" :change:giftnum="webRTC.initgiftnum"
  :micPosition="micPosition" :change:micPosition="webRTC.initMicPositions"
  :giftPosition="giftPosition" :change:giftPosition="webRTC.initGiftPosition"
  :RTCJoin="RTCJoin" :change:RTCJoin="webRTC.changeRTCjoin"
  :RTCOffier="RTCOffier" :change:RTCOffier="webRTC.changeRTCoffier"
  :RTCAnswer="RTCAnswer" :change:RTCAnswer="webRTC.changeRTCAnswer"
  :RTCCandidate="RTCCandidate" :change:RTCCandidate="webRTC.changeRTCCandidate"
  :isAudio="isAudio" :change:isAudio="webRTC.changeIsAudio"
  :isTrue="isTrue" :change:isTrue="webRTC.changeIsTrue"
  :newMess="newMess" :change:newMess="webRTC.changeNewMessage"
  :isMedia="isMedia" :change:isMedia="webRTC.changeIsMedia"
  :name="name" :change:name="webRTC.changeName"
  :animos="animos" :change:animos="webRTC.changeAnimos"
  class="chat"
></view>
```

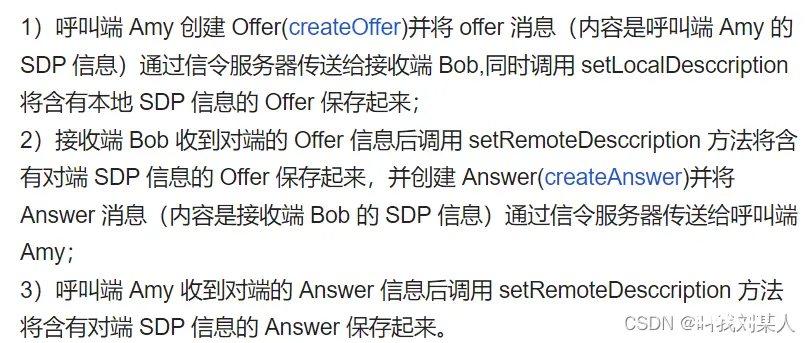
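Because every `change:` observer also fires with the initial value on page load, each handler in the renderjs code begins with `if (!data) return`. A small wrapper can centralize that guard. The sketch below assumes the renderjs observer signature `(newValue, oldValue, ownerInstance, instance)`. `guardChange` is a hypothetical helper, and it deliberately skips only `null`, `undefined` and `''`, so that legitimate falsy values such as `isTrue === false` still get through.

```javascript
// Hypothetical wrapper for renderjs change: observers.
// Skips the "nothing set yet" calls that happen on first render,
// but lets real falsy values (false, 0) through.
function guardChange(handler) {
  return function (newValue, oldValue, ownerInstance, instance) {
    if (newValue === null || newValue === undefined || newValue === '') {
      return // initial/empty value: nothing to do yet
    }
    // keep `this` so the wrapped method still sees the renderjs instance
    return handler.call(this, newValue, oldValue, ownerInstance, instance)
  }
}

// usage inside the renderjs module:
// methods: { changeRTCAnswer: guardChange(async function (data) { /* ... */ }) }
```

Wrapping at definition time keeps the guard out of every method body. It also makes it harder to forget the check when adding a new bound prop.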

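3. Connection order. It must always be this: the newly joined user sends an Offer, through the signaling server, to every user already in the room. Each user who receives an Offer replies with an Answer. Remember this logic!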
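The rule above can be expressed as a pure function. Because only the newcomer ever offers, every pair of users ends up with exactly one offerer. This avoids both sides offering to each other at the same time. Replaying joins one by one produces a full mesh of n(n-1)/2 peer connections. `planOffers` and `replayJoins` are hypothetical helper names for illustration.

```javascript
// Hypothetical helper: who sends an offer to whom when someone joins.
// Rule from this post: the newcomer offers to every existing member; each member answers.
function planOffers(roomUserIds, newcomerId) {
  return roomUserIds
    .filter((id) => id !== newcomerId)
    .map((id) => ({ offerer: newcomerId, answerer: id }))
}

// Replaying joins in order yields every pair exactly once (a full mesh)
function replayJoins(joinOrder) {
  const links = []
  joinOrder.forEach((id, i) => {
    links.push(...planOffers(joinOrder.slice(0, i + 1), id))
  })
  return links
}

console.log(replayJoins(['a', 'b', 'c']).length) // 3
```

The mesh size also explains why this design suits small voice rooms. Each client keeps one `RTCPeerConnection` per other member, so cost grows with room size.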

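4. WebRTC runs in the view layer, which is a browser engine, and on iOS that engine is Safari's (WebKit). Safari only lets audio autoplay after the user has actively interacted with the page; until then there is no sound. So on iOS, every user is muted by default after entering the live room and only hears the room's audio after turning sound on themselves (the best workaround I have found so far).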
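One way to implement "the user turns sound on" is to call `play()` on every remote `<audio>` element from the handler for that action. The sketch below assumes the elements are the ones `renderAudio` collects in `this.audioList`. `unlockAudio` is a hypothetical name. Whether the tap still counts as a user gesture after crossing from the logic layer into renderjs should be verified on a real iOS device.

```javascript
// Hypothetical helper: start every remote <audio> element after a user action.
// Returns how many elements are still blocked, so the UI can keep the
// "turn on sound" button visible.
async function unlockAudio(audioElements) {
  const results = await Promise.allSettled(
    audioElements.map((el) => {
      try {
        return el.play() // a Promise in modern engines, undefined in old ones
      } catch (e) {
        return Promise.reject(e)
      }
    })
  )
  return results.filter((r) => r.status === 'rejected').length
}

// usage in renderjs, from the method that reacts to the user enabling sound:
// unlockAudio(this.audioList).then((blocked) => console.log('still blocked:', blocked))
```

`Promise.allSettled` is used so that one element being refused does not stop the others from starting.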

V. Core code (key steps only)

1. Client socket

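```js
// socketTask.js: the WebSocket lives in the logic layer, never inside renderjs
const socketTask = {
  socket: null,
  reconnectTimer: null,
  connect() {
    const socket = uni.connectSocket({
      url: 'ws://180.76.158.110:9000/socket/websocketv',
      // url: 'ws://192.168.3.254:9000/socket/websocketv',
      complete: (e) => {
        console.log(e)
      },
    })
    getApp().globalData.socket = socket
    socket.onOpen(() => {
      console.log('socket connected')
      // register this user with the signaling server
      socket.send({
        data: JSON.stringify({
          type: 'newConnect',
          userId: uni.getStorageSync('user').id,
        }),
      })
    })
    socket.onClose((res) => {
      console.log('connection closed', res)
      getApp().globalData.socket = null
      socketTask.scheduleReconnect(3000)
    })
    socket.onError((err) => {
      console.log('connection error', err)
      getApp().globalData.socket = null
      socketTask.scheduleReconnect(3000)
    })
    // forward every message to whichever page currently owns watchSocketMessage
    socket.onMessage((data) => {
      socketTask.watchSocketMessage(data)
    })
  },
  // keep at most one pending reconnect, even if onError and onClose both fire
  scheduleReconnect(delay) {
    if (socketTask.reconnectTimer) return
    socketTask.reconnectTimer = setTimeout(() => {
      socketTask.reconnectTimer = null
      socketTask.connect()
    }, delay)
  },
  start() {
    this.connect()
  },
  watchSocketMessage() {
    // each page overrides this with its own business logic
  },
}
export default socketTask
```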
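`start()` returns as soon as `uni.connectSocket` has been called, not when `onOpen` fires. As a result, `await socketTask.start()` in the pages below does not actually wait for the connection, and a message sent right afterwards may hit a socket that is not open yet. Below is a minimal sketch of an outbox that queues messages until the socket is open. It assumes you call `markOpen()` from `onOpen` and `markClosed()` from `onClose`. `createOutbox` is a hypothetical helper.

```javascript
// Hypothetical outbox: queue outgoing messages until the socket reports onOpen, then flush.
// `send` is whatever writes to the socket, e.g. (text) => socket.send({ data: text }).
function createOutbox(send) {
  let open = false
  const pending = []
  return {
    post(message) {
      const text = JSON.stringify(message)
      if (open) send(text)
      else pending.push(text) // not connected yet: keep it for later
    },
    markOpen() {
      open = true
      while (pending.length) send(pending.shift()) // flush in the original order
    },
    markClosed() {
      open = false
    },
  }
}
```

Pages would then call `outbox.post({ type: 'homeList', ... })` instead of touching `getApp().globalData.socket` directly, which also avoids a null socket during a reconnect.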

2. Client room-list page

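```js
async onShow() {
  if (!getApp().globalData.socket) {
    await socketTask.start()
  }
  // take over the shared socket's message callback while this page is visible
  socketTask.watchSocketMessage = (data) => {
    console.log('=========== new message ===========', data)
    this.watchSocketMessages(data)
  }
},
methods: {
  // handle socket messages
  watchSocketMessages(res) {
    try {
      const socket_msg = JSON.parse(res.data)
      console.log('new message', socket_msg)
      switch (socket_msg.type) {
        case 'homeList':
          if (socket_msg.data.length == 0) {
            this.homeList = []
            uni.showToast({ title: 'No rooms yet, go create one!', icon: 'none' })
          } else {
            this.homeList = socket_msg.data
          }
          break
        case 'leave':
          // someone left a room: ask the server for a fresh room list
          getApp().globalData.socket.send({
            data: JSON.stringify({
              type: 'homeList',
              userId: this.userInfo.userId,
            }),
          })
          break
        case 'createSuccess':
          // room created: jump into it. groupInfo is JSON, so URL-encode it
          // (and decode it with decodeURIComponent in broadRoom's onLoad)
          uni.redirectTo({
            url: `broadRoom?rid=${socket_msg.data.groupId}&userId=${this.userInfo.id}&groupInfo=${encodeURIComponent(JSON.stringify(socket_msg.data))}`,
          })
          break
      }
    } catch (e) {
      console.log('failed to handle socket message', e)
    }
  },
},
```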
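`groupInfo` is JSON placed inside a query string. Characters such as `&`, `=` or `?` in it would break parsing unless the value is URL-encoded. The uniapp navigation docs recommend `encodeURIComponent` when sending and `decodeURIComponent` in the target page's `onLoad`. The following is a small sketch of both sides. `buildRoomUrl` and `readGroupInfo` are hypothetical names.

```javascript
// Hypothetical helpers for handing room info from the room list to broadRoom.
function buildRoomUrl(group, userId) {
  // encode every value; groupInfo is JSON and may contain &, = or ?
  const groupInfo = encodeURIComponent(JSON.stringify(group))
  return `broadRoom?rid=${encodeURIComponent(group.groupId)}&userId=${encodeURIComponent(userId)}&groupInfo=${groupInfo}`
}

// In broadRoom's onLoad(options): decode before parsing
function readGroupInfo(options) {
  return JSON.parse(decodeURIComponent(options.groupInfo))
}
```

Long JSON can still exceed URL length limits. For large payloads, passing only `rid` and fetching the rest is the safer design.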

3. Client live room

Logic layer:

```js
async onShow() {
  const that = this
  if (!getApp().globalData.socket) {
    console.log('socket missing, reconnecting')
    await socketTask.start()
  }
  // route socket messages to this page while it is visible
  socketTask.watchSocketMessage = (data) => {
    this.watchSocketMessage(data)
  }
  // detect the platform; renderjs picks the permission flow from isMedia
  uni.getSystemInfo({
    success(res) {
      console.log('current platform', res)
      that.isMedia = res.osName == 'ios' ? 'ios' : 'android'
    },
  })
},
methods: {
  async watchSocketMessage(res) {
    const data = JSON.parse(res.data)
    switch (data.type) {
      case 'join': {
        // we joined: show the message, update the user list and mic seats
        this.newMessaGes(data)
        this.setUserList(data.admin)
        this.updataNewMic(data)
        // every user in the room except ourselves
        const arr = this.userList.filter((item) => item.userId !== this.userId)
        // tell renderjs to create a peer and an offer for each of them
        this.RTCJoin = arr
        this.updataIsShow()
        break
      }
      case 'newjoin':
        // someone else joined; they will send us an offer
        this.newMessaGes(data)
        this.setUserList(data.admin)
        break
      case 'offer':
        // a newcomer sent an offer: renderjs creates the answer
        console.log('received offer', data)
        this.RTCOffier = data
        break
      case 'answer':
        // renderjs finds the matching peer and sets the answer
        console.log('received answer', data)
        this.RTCAnswer = data
        break
      case 'candidate':
        // renderjs adds the candidate to the matching peer
        this.RTCCandidate = data
        break
      case 'leave':
        // '房主已解散房间' ("the host has dissolved the room") is sent by the server
        if (data.data == '房主已解散房间') {
          this.closesAdmin()
        } else {
          this.newMessaGes({ data })
          this.setUserList(data.admin)
          this.updataNewMic(data)
        }
        break
      case 'apply-admin':
        this.updataIsApply(data.data)
        break
      case 'newMic':
      case 'uplMicro':
        this.updataNewMic(data)
        break
      case 'newMessage':
        this.newMess = data
        break
    }
  },
},
```
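A long `switch` like this is easy to get wrong: a forgotten `break` silently falls through into the next case. The sketch below shows an alternative, a handler table keyed by message type. It assumes messages always carry a string `type`. `createDispatcher` is a hypothetical helper, not a uniapp API.

```javascript
// Hypothetical alternative to the switch: a handler table keyed by message type.
// There is no fall-through, and unknown types are reported instead of ignored.
function createDispatcher(handlers, onUnknown = (msg) => console.warn('unhandled message type:', msg.type)) {
  return function dispatch(raw) {
    // socket frames arrive as strings; already-parsed objects are accepted too
    const msg = typeof raw === 'string' ? JSON.parse(raw) : raw
    // own properties only, so types like "toString" don't hit Object.prototype
    if (Object.prototype.hasOwnProperty.call(handlers, msg.type)) {
      return handlers[msg.type](msg)
    }
    return onUnknown(msg)
  }
}
```

In the page this could be wired as `this.dispatch = createDispatcher({ offer: (d) => { this.RTCOffier = d }, newMic: (d) => this.updataNewMic(d), ... })` and `socketTask.watchSocketMessage = (res) => this.dispatch(res.data)`.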

View layer:

```html
<script module="webRTC" lang="renderjs">
// All of the following methods live inside methods: {}

// change:RTCCandidate observer: find the matching peer and add the ICE candidate
async changeRTCCandidate(data) {
  if (!data) {
    return
  }
  const arrs = this.otherPeerConnections.concat(this.myPeerConnections)
  const peerr = arrs.filter((item) => item.otherId == data.userId)
  // the candidate may arrive before its peer exists (`peer == {}` is always false)
  if (peerr.length == 0 || !peerr[0].peer) {
    console.log('no peer yet for', data.userId)
    return
  }
  await peerr[0].peer.addIceCandidate(new RTCIceCandidate(data.candidate))
},
// change:RTCAnswer observer: find the peer we offered to and set its answer
async changeRTCAnswer(data) {
  if (!data) {
    return
  }
  const peers = this.myPeerConnections.filter((item) => item.otherId == data.userId)
  if (peers.length == 0) {
    return
  }
  await peers[0].peer.setRemoteDescription(new RTCSessionDescription(data.answer))
},
// change:RTCOffier observer: a newcomer sent us an offer, so create the answer
async changeRTCoffier(data) {
  if (!data) {
    return
  }
  let pear = null
  try {
    pear = new RTCPeerConnection(iceServers)
  } catch (e) {
    console.log('failed to create RTCPeerConnection (answer side)', e)
    return
  }
  // add the local audio to the peer (track enabled only when isTrue)
  this.localStream.getAudioTracks()[0].enabled = this.isTrue
  this.localStream.getTracks().forEach((track) => pear.addTrack(track, this.localStream))
  this.otherPeerConnections.push({ peer: pear, otherId: data.userId })
  // when the remote user adds a stream, play it through an <audio> element
  pear.ontrack = (event) => {
    const track = event.track || event.streams[0]?.getTracks()[0]
    if (track && track.kind === 'audio') {
      this.renderAudio(data.userId, event.streams[0])
    } else {
      console.warn('No audio track found in the received stream.')
    }
  }
  // send each local ICE candidate to the offerer via the signaling server
  pear.onicecandidate = (event) => {
    if (event.candidate) {
      this.$ownerInstance.callMethod('sendCandidate', {
        type: 'candidate',
        userId: this.userId,
        rid: this.rid,
        msg: event.candidate,
        formUserId: data.userId,
      })
    }
  }
  // the remote description must be set before the answer is created
  await pear.setRemoteDescription(new RTCSessionDescription(data.offer))
  const answer = await pear.createAnswer()
  await pear.setLocalDescription(answer)
  // ask the logic layer to send the answer to the offerer
  this.$ownerInstance.callMethod('sendAnswer', {
    type: 'answer',
    userId: this.userId,
    rid: this.rid,
    msg: answer,
    formUserId: data.userId,
  })
},
// change:RTCJoin observer: we are the newcomer, so create a peer and an offer per user
changeRTCjoin(RTCjoin) {
  if (!RTCjoin) {
    return
  }
  RTCjoin.forEach(async (item) => {
    let peer = null
    try {
      peer = new RTCPeerConnection(iceServers)
    } catch (e) {
      console.log('failed to create RTCPeerConnection (offer side)', e)
      return
    }
    this.localStream.getAudioTracks()[0].enabled = this.isTrue
    this.localStream.getTracks().forEach((track) => peer.addTrack(track, this.localStream))
    peer.ontrack = (event) => {
      const track = event.track || event.streams[0]?.getTracks()[0]
      if (track && track.kind === 'audio') {
        this.renderAudio(item.userId, event.streams[0])
      } else {
        console.warn('No audio track found in the received stream.')
      }
    }
    // send each local ICE candidate to that user via the signaling server
    peer.onicecandidate = (event) => {
      if (event.candidate) {
        this.$ownerInstance.callMethod('sendCandidate', {
          type: 'candidate',
          userId: this.userId,
          rid: this.rid,
          msg: event.candidate,
          formUserId: item.userId,
        })
      }
    }
    this.myPeerConnections.push({ peer: peer, otherId: item.userId })
    const offer = await peer.createOffer(this.offerOptions)
    await peer.setLocalDescription(offer)
    // ask the logic layer to send the offer to that user
    this.$ownerInstance.callMethod('sendOffier', {
      type: 'offer',
      userId: this.userId,
      rid: this.rid,
      msg: offer,
      formUserId: item.userId,
    })
  })
},
// create (or reuse) one <audio> element per remote user and attach the stream
renderAudio(uid, stream) {
  let audio2 = document.getElementById(`audio_${uid}`)
  if (!audio2) {
    audio2 = document.createElement('audio')
    audio2.id = `audio_${uid}`
    audio2.setAttribute('webkit-playsinline', '')
    audio2.setAttribute('autoplay', true)
    audio2.setAttribute('playsinline', '')
    audio2.onloadedmetadata = () => {
      if (this.isAudio == 1) {
        // isAudio == 1: do not autoplay
        audio2.pause()
      } else {
        audio2.play()
      }
    }
    // attach it (no controls, so it stays invisible) so getElementById finds it next time
    document.body.appendChild(audio2)
    this.audioList.push(audio2)
  }
  if ('srcObject' in audio2) {
    audio2.srcObject = stream
  } else {
    // legacy fallback for engines without srcObject
    audio2.src = window.URL.createObjectURL(stream)
  }
},
async initMedia() {
  const that = this
  // #ifdef APP-PLUS
  if (this.isMedia == 'android') {
    // Android: request the microphone permission at runtime first
    plus.android.requestPermissions(
      ['android.permission.RECORD_AUDIO'],
      async (resultObj) => {
        if (resultObj.granted.length == 0) {
          console.log('microphone permission denied', resultObj)
          return
        }
        that.localStream = await that.getUserMedia()
        that.$ownerInstance.callMethod('sendJoin', {
          type: 'join',
          userId: that.userId,
          rid: that.rid,
          name: that.name,
        })
      },
      function (error) {
        console.log('requestPermissions failed', error)
      }
    )
  } else {
    // iOS
    try {
      that.localStream = await that.getUserMedia()
    } catch (err) {
      console.log('getUserMedia failed', err)
      return
    }
    that.$ownerInstance.callMethod('sendJoin', {
      type: 'join',
      userId: that.userId,
      rid: that.rid,
      name: that.name,
    })
  }
  // #endif
  // #ifdef H5
  that.localStream = await that.getUserMedia()
  // tell the logic layer we are ready to join
  this.$ownerInstance.callMethod('sendJoin', {
    type: 'join',
    userId: this.userId,
    rid: this.rid,
    name: this.name,
  })
  // #endif
},
// wrap getUserMedia and turn the error name into a readable message
getUserMedia() {
  return navigator.mediaDevices.getUserMedia(this.mediaConstraints).catch((err) => {
    if (err.name === 'NotAllowedError' || err.name === 'PermissionDeniedError') {
      // the user refused the permission request
      throw new Error('The user denied access to the camera and microphone')
    } else if (err.name === 'NotFoundError' || err.name === 'DevicesNotFoundError') {
      // no camera or microphone found
      throw new Error('No camera or microphone was found')
    } else if (err.name === 'NotReadableError' || err.name === 'TrackStartError') {
      // the device exists but cannot be read
      throw new Error('The camera or microphone could not be read')
    } else if (err.name === 'OverconstrainedError' || err.name === 'ConstraintNotSatisfiedError') {
      // the media constraints cannot be satisfied
      throw new Error('Request rejected: the media constraints cannot be satisfied')
    } else if (err.name === 'TypeError') {
      throw new Error('A type error occurred')
    } else {
      throw new Error('An unknown error occurred')
    }
  })
},
</script>
```
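In `changeRTCCandidate`, a candidate can arrive before the matching peer exists or before `setRemoteDescription` has finished, and at that point it is simply lost. A common remedy is to buffer candidates per remote user and apply them once the peer has a remote description. The sketch below does that. `createCandidateBuffer` is hypothetical. It relies on the standard fact that `addIceCandidate` accepts a plain candidate-init object. It assumes you call `register()` after creating each peer and `flush()` after each `setRemoteDescription` resolves.

```javascript
// Hypothetical helper: buffer ICE candidates per remote user until that user's
// RTCPeerConnection exists and has a remote description, then apply them.
function createCandidateBuffer() {
  const peers = new Map() // userId -> RTCPeerConnection (or anything with the same methods)
  const queued = new Map() // userId -> candidate init objects waiting to be applied

  async function flush(userId) {
    const peer = peers.get(userId)
    if (!peer || !peer.remoteDescription) return // not ready yet, keep waiting
    const list = queued.get(userId) || []
    queued.delete(userId)
    for (const candidate of list) {
      await peer.addIceCandidate(candidate)
    }
  }

  return {
    // call right after creating the peer
    register(userId, peer) {
      peers.set(userId, peer)
      return flush(userId)
    },
    // call from changeRTCCandidate instead of addIceCandidate directly
    add(userId, candidate) {
      const list = queued.get(userId) || []
      list.push(candidate)
      queued.set(userId, list)
      return flush(userId)
    },
    // call after setRemoteDescription resolves
    flush,
  }
}
```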

4. Signaling server

Omitted (it's just a socket server with a switch on the message type; if you can't work it out, message me, small fee).
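The real server is not shown. For orientation, here is a minimal sketch of just its routing core, written as a pure function so it stays independent of the socket library. It assumes the message shapes the client code reads: `data.admin` as a list of `{ userId }`, and `data.offer`, `data.answer` and `data.candidate` tagged with the sender's `userId`. `route` and the room map are hypothetical. The real server also handles room creation, the room list, mic seats, gifts and other features.

```javascript
// Hypothetical routing core for the signaling server (the post omits the real one).
// rooms: Map<rid, Set<userId>>. Returns [{ to, payload }]; the transport layer
// looks up each recipient's connection and sends JSON.stringify(payload).
function route(rooms, msg) {
  const members = rooms.get(msg.rid) || new Set()
  const memberList = () => [...members].map((id) => ({ userId: id }))
  switch (msg.type) {
    case 'join': {
      members.add(msg.userId)
      rooms.set(msg.rid, members)
      const admin = memberList()
      // 'join' goes back to the newcomer, 'newjoin' to everyone already inside
      return admin.map(({ userId }) => ({
        to: userId,
        payload: { type: userId === msg.userId ? 'join' : 'newjoin', userId: msg.userId, admin },
      }))
    }
    case 'offer':
    case 'answer':
    case 'candidate':
      // point-to-point: forward to formUserId; body arrives as data.offer / data.answer / data.candidate
      return [{ to: msg.formUserId, payload: { type: msg.type, userId: msg.userId, [msg.type]: msg.msg } }]
    case 'leave': {
      members.delete(msg.userId)
      const admin = memberList()
      return admin.map(({ userId }) => ({ to: userId, payload: { type: 'leave', userId: msg.userId, admin } }))
    }
    default:
      return []
  }
}
```

Keeping routing separate from the transport makes the offer/answer/candidate relay easy to test without opening any sockets.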
