Stable Diffusion | Gradio Interface Design and webUI API Calls

旭_1994 2024-10-20 17:03:02

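This article uses the webUI API to build an interactive Gradio interface similar to webUI. It supports txt2img and img2img (SD1.x, SD2.x, SDXL), Embedding, Lora, X/Y/Z Plot, ADetailer, ControlNet, upscaling (Extras), and image metadata reading (PNG Info).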
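As a rough orientation before the full listing, the core API round trip can be sketched as follows: build a JSON payload, POST it to `/sdapi/v1/txt2img`, and decode the base64 strings returned under `"images"`. This is a minimal sketch and not the article's code. The helper names (`build_txt2img_payload`, `decode_images`, `txt2img`) are hypothetical, it uses the standard-library `urllib` instead of `requests` to stay dependency-free, and it assumes a webUI instance that was started with the API enabled.

```python
import base64
import json
import urllib.request


def build_txt2img_payload(prompt, negative_prompt="", steps=20, cfg_scale=7,
                          width=512, height=768, seed=-1):
    """Assemble a JSON body for POST /sdapi/v1/txt2img (hypothetical helper)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "cfg_scale": cfg_scale,
        "width": width,
        "height": height,
        "seed": seed,
        "alwayson_scripts": {},
    }


def decode_images(response_json):
    """Turn the base64 strings under "images" into raw image bytes."""
    return [base64.b64decode(item) for item in response_json.get("images", [])]


def txt2img(base_url, payload, timeout=600):
    """POST the payload to a running webUI and return the decoded image bytes."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return decode_images(json.load(response))


if __name__ == "__main__":
    # Offline demonstration with a fake response; no server is contacted.
    fake = {"images": [base64.b64encode(b"fake image bytes").decode("ascii")]}
    print(build_txt2img_payload("1girl")["steps"])
    print(len(decode_images(fake)))
```

With a server available, `txt2img("http://127.0.0.1:7860", build_txt2img_payload("1girl"))` would return a list of byte strings that can be written to `.png` files or opened with PIL.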

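1. Online demo

The code in this article is deployed on the Baidu PaddlePaddle AI Studio platform, where you can try the original Stable Diffusion ComfyUI/webUI interfaces and the custom Gradio interface online.

Project link: Stable Diffusion webUI online demo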

2. Custom Gradio interface showcase

txt2img interface:

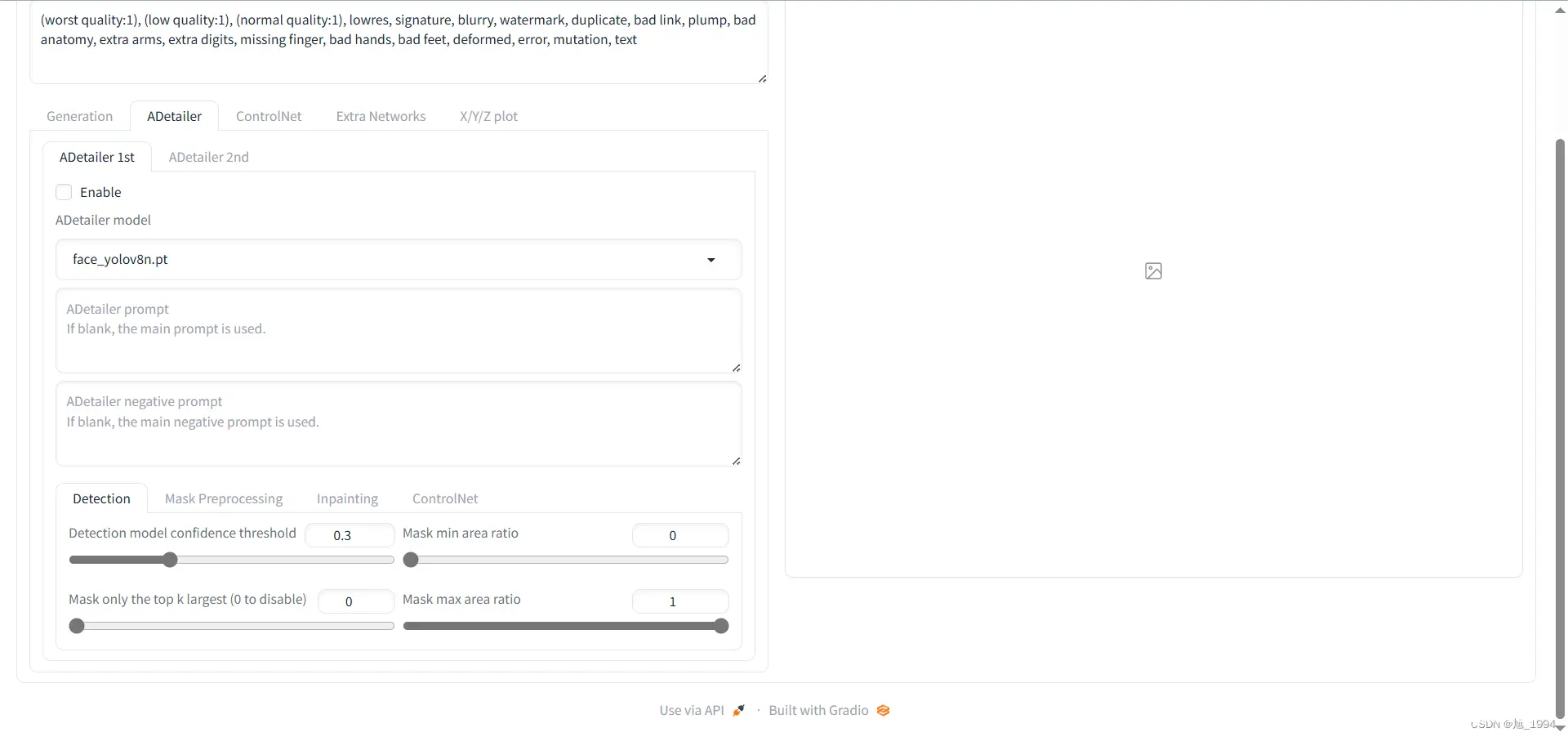

ADetailer settings interface:

ControlNet settings interface:

X/Y/Z Plot settings interface:

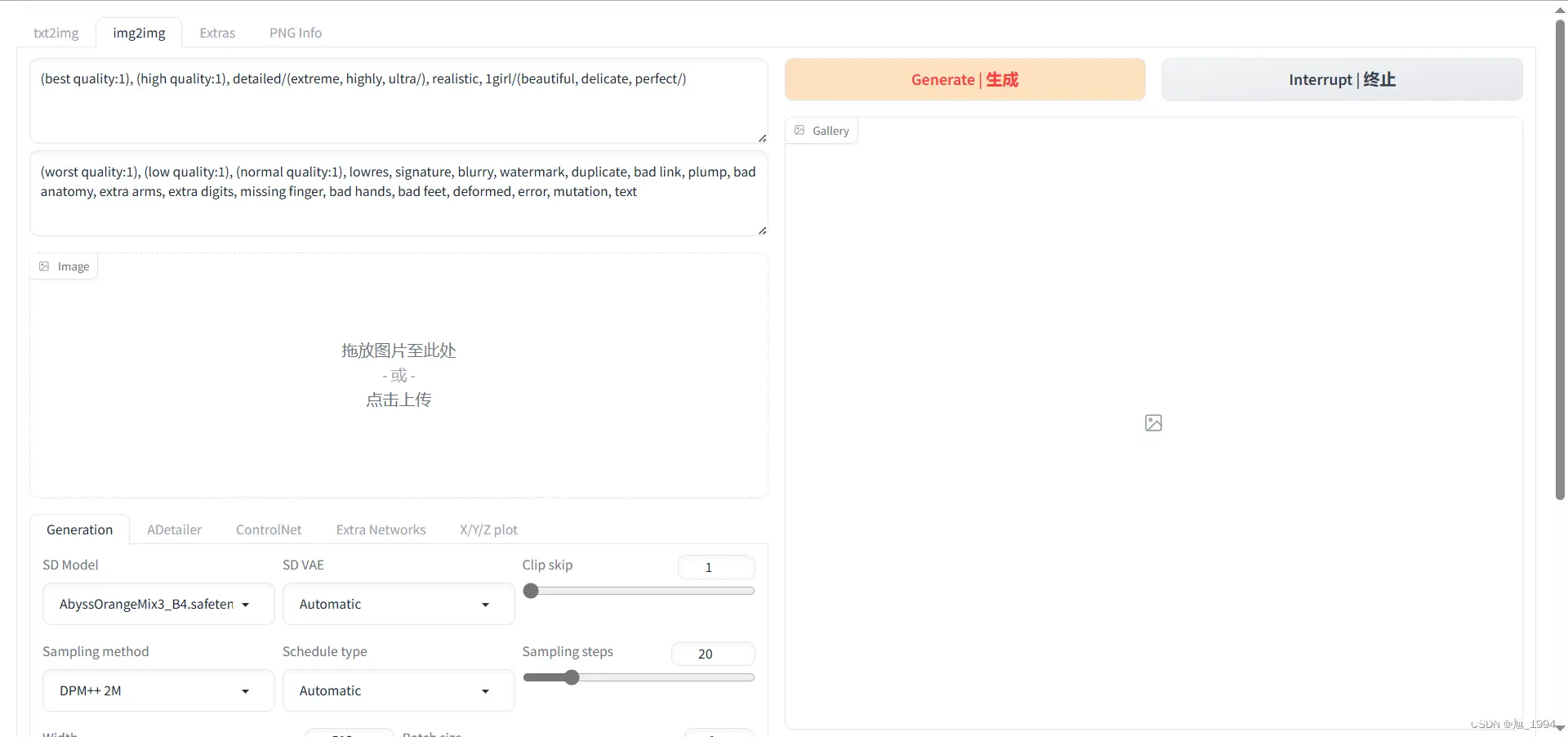

img2img interface:

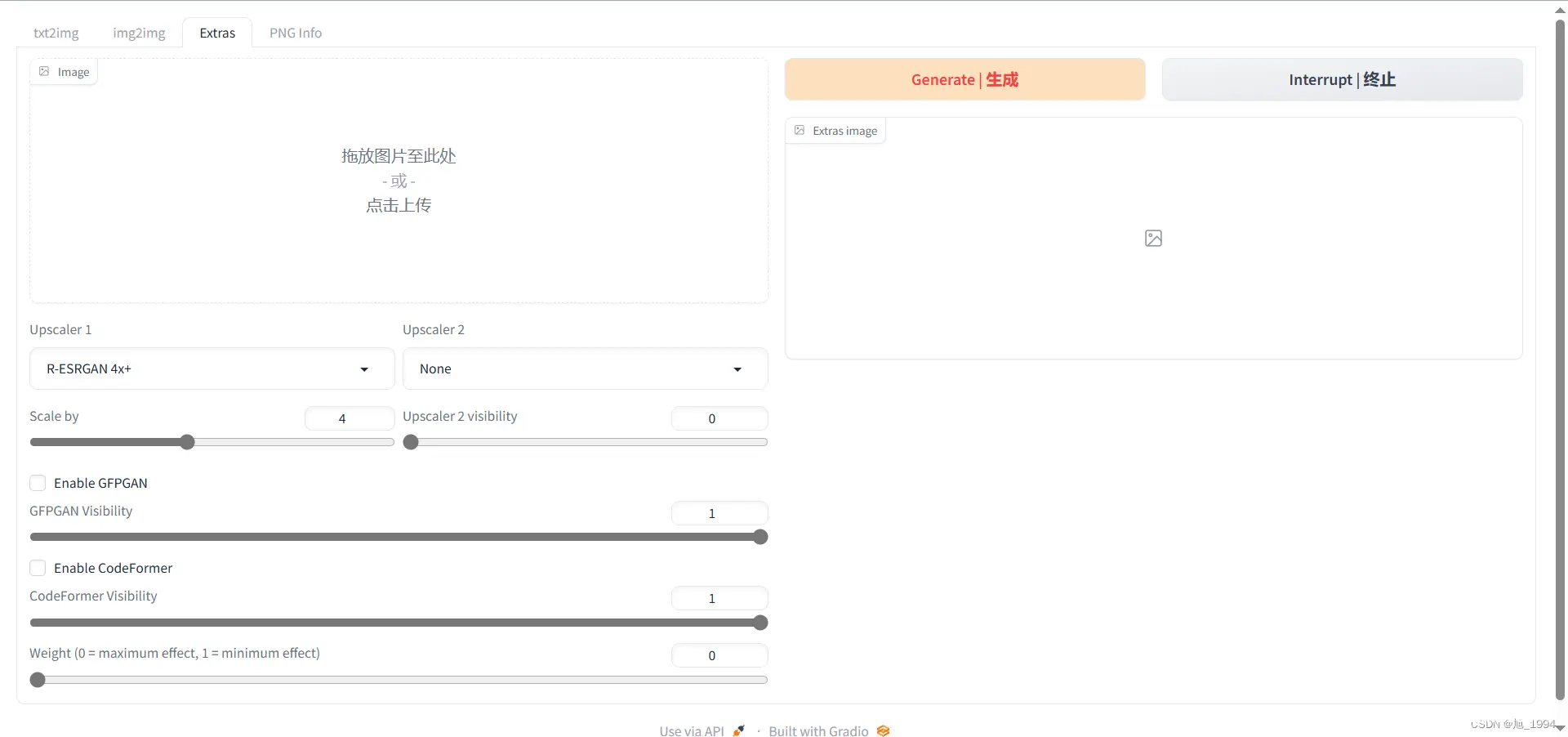

Image upscaling (Extras) interface:

Image info (PNG Info) interface:

3. Gradio interface design and webUI API calls
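One pattern in the listing that follows is worth isolating: the ADetailer and ControlNet tabs store each enabled unit in a dict keyed by unit index, and the generate step merges those dicts into the `alwayson_scripts` field of the request. The sketch below illustrates that merge in simplified form. The function name `build_alwayson_scripts` is hypothetical, and unlike the listing it omits a script entirely when no unit is enabled instead of sending an empty `args` list.

```python
def build_alwayson_scripts(ad_units, cn_units, skip_img2img=False):
    """Merge enabled ADetailer / ControlNet units into an alwayson_scripts dict.

    ad_units and cn_units map a unit index to that unit's settings dict,
    mirroring the ad_args[gen_type] / cn_args[gen_type] dicts in the listing.
    """
    scripts = {}
    if ad_units:
        # ADetailer: two leading booleans (enable, skip img2img), then one dict per unit.
        units = [ad_units[key] for key in sorted(ad_units)]
        scripts["adetailer"] = {"args": [True, skip_img2img] + units}
    if cn_units:
        # ControlNet: one dict per unit, in unit order.
        scripts["controlnet"] = {"args": [cn_units[key] for key in sorted(cn_units)]}
    return scripts


if __name__ == "__main__":
    ad_units = {"1": {"ad_model": "hand_yolov8n.pt"}, "0": {"ad_model": "face_yolov8n.pt"}}
    print(build_alwayson_scripts(ad_units, {}))
```

Sorting the keys keeps the units in the same order as the tabs, regardless of the order in which the user enabled them.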

<code>import base64

import datetime
import io
import os
import re
import subprocess

import gradio as gr
import requests
from PIL import Image, PngImagePlugin

# design_mode = 1: use the fixed URL below.
# design_mode = 0: look up the port of the running webUI process with netstat.
design_mode = 1
save_images = "Yes"
url = "http://127.0.0.1:7860"

if design_mode == 0:
    cmd = "netstat -tulnp"
    netstat_output = subprocess.run(cmd, shell=True, stdout=subprocess.PIPE, stderr=subprocess.PIPE, text=True).stdout.splitlines()
    for i in netstat_output:
        if "stable-diffus" in i:
            # The seventh number on the line is taken as the listening port.
            port = int(re.findall(r'\d+', i)[6])
            url = f"http://127.0.0.1:{port}"

# Images are saved to ./output/<YYYY-MM-DD>.
output_dir = os.getcwd() + "/output/" + datetime.date.today().strftime("%Y-%m-%d")
os.makedirs(output_dir, exist_ok=True)
os.environ["GRADIO_ANALYTICS_ENABLED"] = "False"

default = {
    "prompt": "(best quality:1), (high quality:1), detailed/(extreme, highly, ultra/), realistic, 1girl/(beautiful, delicate, perfect/)",
    "negative_prompt": "(worst quality:1), (low quality:1), (normal quality:1), lowres, signature, blurry, watermark, duplicate, bad link, plump, bad anatomy, extra arms, extra digits, missing finger, bad hands, bad feet, deformed, error, mutation, text",
    "clip_skip": 1,
    "width": 512,
    "height": 768,
    "size_step": 64,
    "steps": 20,
    "cfg": 7,
    "ad_nums": 2,
    "ad_model": ["face_yolov8n.pt", "hand_yolov8n.pt"],
    "cn_nums": 3,
    "cn_type": "Canny",
    "gallery_height": 600,
    "lora_weight": 0.8,
    "hidden_models": ["stable_cascade_stage_c", "stable_cascade_stage_b", "svd_xt_1_1", "control_v11p_sd15_canny", "control_v11f1p_sd15_depth", "control_v11p_sd15_openpose"]
}

response = requests.get(url=f"{url}/sdapi/v1/samplers").json()
samplers = [item["name"] for item in response]

response = requests.get(url=f"{url}/sdapi/v1/schedulers").json()
schedulers = [item["label"] for item in response]

response = requests.get(url=f"{url}/sdapi/v1/upscalers").json()
upscalers = [item["name"] for item in response]

sd_models = []
sd_models_list = {}
response = requests.get(url=f"{url}/sdapi/v1/sd-models").json()
for item in response:
    path, sd_model = os.path.split(item["title"])
    sd_model_name, sd_model_extension = os.path.splitext(sd_model)
    if sd_model_name not in default["hidden_models"]:
        sd_models.append(sd_model)
        sd_models_list[sd_model] = item["title"]
sd_models = sorted(sd_models)

response = requests.get(url=f"{url}/sdapi/v1/sd-vae").json()
sd_vaes = ["Automatic", "None"] + [item["model_name"] for item in response]

response = requests.get(url=f"{url}/sdapi/v1/embeddings").json()
embeddings = list(response["loaded"])

response = requests.get(url=f"{url}/sdapi/v1/extensions").json()
extensions = [item["name"] for item in response]

loras = []
loras_name = {}
loras_activation_text = {}
response = requests.get(url=f"{url}/sdapi/v1/loras").json()
for item in response:
    lora_name = item["name"]
    lora_info = requests.get(url=f"{url}/tacapi/v1/lora-info/{lora_name}").json()
    # Show the SD version next to the Lora name when the info provides it.
    if lora_info and "sd version" in lora_info:
        lora_type = lora_info["sd version"]
        lora_name_type = f"{lora_name} ({lora_type})"
    else:
        lora_name_type = f"{lora_name}"
    loras.append(lora_name_type)
    loras_name[lora_name_type] = lora_name
    # Look the key up in lora_info; the original checked the still-empty result dict.
    if lora_info and "activation text" in lora_info:
        loras_activation_text[lora_name_type] = lora_info["activation text"]

xyz_args = {}
xyz_plot_types = {}
last_choice = "Size"
response = requests.get(url=f"{url}/sdapi/v1/script-info").json()
for item in response:
    if item["name"] == "x/y/z plot":
        if not item["is_img2img"]:
            xyz_plot_types["txt2img"] = item["args"][0]["choices"]
            choice_index = xyz_plot_types["txt2img"].index(last_choice) + 1
            xyz_plot_types["txt2img"] = xyz_plot_types["txt2img"][:choice_index]
        else:
            xyz_plot_types["img2img"] = item["args"][0]["choices"]
            choice_index = xyz_plot_types["img2img"].index(last_choice) + 1
            xyz_plot_types["img2img"] = xyz_plot_types["img2img"][:choice_index]

if "adetailer" in extensions:
    ad_args = {"txt2img": {}, "img2img": {}}
    ad_skip_img2img = False
    ad_models = ["None"]
    response = requests.get(url=f"{url}/adetailer/v1/ad_model").json()
    for key in response["ad_model"]:
        ad_models.append(key)

if "sd-webui-controlnet" in extensions:
    cn_args = {"txt2img": {}, "img2img": {}}
    cn_types = []
    cn_types_list = {}
    response = requests.get(url=f"{url}/controlnet/control_types").json()
    for key in response["control_types"]:
        cn_types.append(key)
        cn_types_list[key] = response["control_types"][key]
    cn_default_type = default["cn_type"]
    cn_module_list = cn_types_list[cn_default_type]["module_list"]
    cn_model_list = cn_types_list[cn_default_type]["model_list"]
    cn_default_option = cn_types_list[cn_default_type]["default_option"]
    cn_default_model = cn_types_list[cn_default_type]["default_model"]

def save_image(image, part1, part2):
    # Pick the first unused "<part1>-<part2>-<counter>.png" name in output_dir.
    counter = 1
    image_name = f"{part1}-{part2}-{counter}.png"
    while os.path.exists(os.path.join(output_dir, image_name)):
        counter += 1
        image_name = f"{part1}-{part2}-{counter}.png"
    image_path = os.path.join(output_dir, image_name)
    # Copy the image's text metadata into the saved PNG.
    image_metadata = PngImagePlugin.PngInfo()
    for key, value in image.info.items():
        if isinstance(key, str) and isinstance(value, str):
            image_metadata.add_text(key, value)
    image.save(image_path, format="PNG", pnginfo=image_metadata)


def pil_to_base64(image_pil):
    buffer = io.BytesIO()
    image_pil.save(buffer, format="png")
    image_buffer = buffer.getbuffer()
    image_base64 = base64.b64encode(image_buffer).decode("utf-8")
    return image_base64


def base64_to_pil(image_base64):
    image_binary = base64.b64decode(image_base64)
    image_pil = Image.open(io.BytesIO(image_binary))
    return image_pil

def format_prompt(prompt):
    # Normalize whitespace and commas so that tags are separated by ", ".
    prompt = re.sub(r"\s+,", ",", prompt)
    prompt = re.sub(r"\s+", " ", prompt)
    prompt = re.sub(",,+", ",", prompt)
    prompt = re.sub(",", ", ", prompt)
    prompt = re.sub(r"\s+", " ", prompt)
    prompt = re.sub(r"^,", "", prompt)
    prompt = re.sub(r"^ ", "", prompt)
    prompt = re.sub(r" $", "", prompt)
    prompt = re.sub(r",$", "", prompt)
    prompt = re.sub(": ", ":", prompt)
    return prompt


def post_interrupt():
    global interrupt
    interrupt = True
    requests.post(url=f"{url}/sdapi/v1/interrupt").json()


def gr_update_visible(visible):
    return gr.update(visible=visible)


def ordinal(n: int) -> str:
    # 1 -> "1st", 2 -> "2nd", 3 -> "3rd", 11 -> "11th", ...
    d = {1: "st", 2: "nd", 3: "rd"}
    return str(n) + ("th" if 11 <= n % 100 <= 13 else d.get(n % 10, "th"))

def add_lora(prompt, lora):
    lora_weight = default["lora_weight"]
    # Remove existing <lora:...> tags and known activation texts, then re-add the selection.
    prompt = re.sub(r"<[^<>]+>", "", prompt)
    for elem in loras_activation_text:
        # Plain string replacement: activation text may contain regex metacharacters.
        prompt = prompt.replace(loras_activation_text[elem], "")
    prompt = format_prompt(prompt)
    for elem in lora:
        lora_name = loras_name[elem]
        lora_activation_text = loras_activation_text.get(elem, "")
        if lora_activation_text == "":
            prompt = f"{prompt}, <lora:{lora_name}:{lora_weight}>"
        else:
            prompt = f"{prompt}, <lora:{lora_name}:{lora_weight}> {lora_activation_text}"
    return prompt


def add_embedding(negative_prompt, embedding):
    # Remove every known embedding, then prepend the selected ones in order.
    for elem in embeddings:
        negative_prompt = negative_prompt.replace(f"{elem},", "")
    negative_prompt = format_prompt(negative_prompt)
    for elem in embedding[::-1]:
        negative_prompt = f"{elem}, {negative_prompt}"
    return negative_prompt

def add_xyz_plot(payload, gen_type):
    global xyz_args
    if gen_type in xyz_args:
        payload["script_name"] = "X/Y/Z plot"
        payload["script_args"] = xyz_args[gen_type]
    return payload


def xyz_update_args(*args):
    gen_type, enable_xyz_plot, x_type, x_values, x_values_dropdown, y_type, y_values, y_values_dropdown, z_type, z_values, z_values_dropdown, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, vary_seeds_x, vary_seeds_y, vary_seeds_z, margin_size, csv_mode = args
    global xyz_args
    # The script expects the index of each axis type, not its label.
    x_type = xyz_plot_types[gen_type].index(x_type)
    y_type = xyz_plot_types[gen_type].index(y_type)
    z_type = xyz_plot_types[gen_type].index(z_type)
    args = [x_type, x_values, x_values_dropdown, y_type, y_values, y_values_dropdown, z_type, z_values, z_values_dropdown, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, vary_seeds_x, vary_seeds_y, vary_seeds_z, margin_size, csv_mode]
    if enable_xyz_plot:
        xyz_args[gen_type] = args
    else:
        # pop() avoids a KeyError when the plot was never enabled.
        xyz_args.pop(gen_type, None)

def xyz_update_choices(xyz_type):
    choices_by_type = {
        "Checkpoint name": sd_models,
        "VAE": sd_vaes,
        "Sampler": samplers,
        "Schedule type": schedulers,
        "Hires sampler": samplers,
        "Hires upscaler": upscalers,
        "Always discard next-to-last sigma": ["False", "True"],
        "SGM noise multiplier": ["False", "True"],
        "Refiner checkpoint": sd_models,
        "RNG source": ["GPU", "CPU", "NV"],
        "FP8 mode": ["Disable", "Enable for SDXL", "Enable"],
    }
    choices = choices_by_type.get(xyz_type, [])
    if choices == []:
        return gr.update(visible=True, value=None), gr.update(visible=False)
    else:
        return gr.update(visible=False), gr.update(visible=True, choices=choices)

def xyz_blocks(gen_type):
    with gr.Blocks() as demo:
        with gr.Row():
            xyz_gen_type = gr.Textbox(visible=False, value=gen_type)
            enable_xyz_plot = gr.Checkbox(label="Enable")
        with gr.Row():
            x_type = gr.Dropdown(xyz_plot_types[gen_type], label="X type", value=xyz_plot_types[gen_type][1])
            x_values = gr.Textbox(label="X values", lines=1)
            x_values_dropdown = gr.Dropdown(label="X values", visible=False, multiselect=True, interactive=True)
        with gr.Row():
            y_type = gr.Dropdown(xyz_plot_types[gen_type], label="Y type", value=xyz_plot_types[gen_type][0])
            y_values = gr.Textbox(label="Y values", lines=1)
            y_values_dropdown = gr.Dropdown(label="Y values", visible=False, multiselect=True, interactive=True)
        with gr.Row():
            z_type = gr.Dropdown(xyz_plot_types[gen_type], label="Z type", value=xyz_plot_types[gen_type][0])
            z_values = gr.Textbox(label="Z values", lines=1)
            z_values_dropdown = gr.Dropdown(label="Z values", visible=False, multiselect=True, interactive=True)
        with gr.Row():
            with gr.Column():
                draw_legend = gr.Checkbox(label='Draw legend', value=True)
                no_fixed_seeds = gr.Checkbox(label='Keep -1 for seeds', value=False)
                vary_seeds_x = gr.Checkbox(label='Vary seeds for X', value=False)
                vary_seeds_y = gr.Checkbox(label='Vary seeds for Y', value=False)
                vary_seeds_z = gr.Checkbox(label='Vary seeds for Z', value=False)
            with gr.Column():
                include_lone_images = gr.Checkbox(label='Include Sub Images', value=True)
                include_sub_grids = gr.Checkbox(label='Include Sub Grids', value=False)
                csv_mode = gr.Checkbox(label='Use text inputs instead of dropdowns', value=False)
                margin_size = gr.Slider(label="Grid margins (px)", minimum=0, maximum=500, value=0, step=2)
        x_type.change(fn=xyz_update_choices, inputs=x_type, outputs=[x_values, x_values_dropdown])
        y_type.change(fn=xyz_update_choices, inputs=y_type, outputs=[y_values, y_values_dropdown])
        z_type.change(fn=xyz_update_choices, inputs=z_type, outputs=[z_values, z_values_dropdown])
        xyz_inputs = [xyz_gen_type, enable_xyz_plot, x_type, x_values, x_values_dropdown, y_type, y_values, y_values_dropdown, z_type, z_values, z_values_dropdown, draw_legend, include_lone_images, include_sub_grids, no_fixed_seeds, vary_seeds_x, vary_seeds_y, vary_seeds_z, margin_size, csv_mode]
        for gr_block in xyz_inputs:
            if isinstance(gr_block, gr.Slider):
                gr_block.release(fn=xyz_update_args, inputs=xyz_inputs, outputs=None)
            else:
                gr_block.change(fn=xyz_update_args, inputs=xyz_inputs, outputs=None)
    return demo

def add_adetailer(payload, gen_type):
    global ad_args, ad_skip_img2img
    args = ad_args[gen_type]
    args = dict(sorted(args.items(), key=lambda x: x[0]))
    payload["alwayson_scripts"]["adetailer"] = {"args": []}
    if args == {}:
        return payload
    # Two leading booleans (enable, skip img2img), then one dict per enabled unit.
    if gen_type == "img2img":
        payload["alwayson_scripts"]["adetailer"]["args"] = [True, ad_skip_img2img]
    else:
        payload["alwayson_scripts"]["adetailer"]["args"] = [True, False]
    for i in args:
        payload["alwayson_scripts"]["adetailer"]["args"].append(args[i])
    return payload

def ad_update_args(*args):
    # cn_values holds the five ControlNet inputs when that extension is installed, else it is empty.
    ad_gen_type, ad_num, enable_ad, ad_model, ad_prompt, ad_negative_prompt, ad_confidence, ad_mask_min_ratio, ad_mask_k_largest, ad_mask_max_ratio, ad_x_offset, ad_y_offset, ad_dilate_erode, ad_mask_merge_invert, ad_mask_blur, ad_denoising_strength, ad_inpaint_only_masked, ad_use_inpaint_width_height, ad_inpaint_only_masked_padding, ad_inpaint_width, ad_inpaint_height, ad_use_steps, ad_use_cfg_scale, ad_steps, ad_cfg_scale, ad_use_checkpoint, ad_use_vae, ad_checkpoint, ad_vae, ad_use_sampler, ad_sampler, ad_scheduler, ad_use_noise_multiplier, ad_use_clip_skip, ad_noise_multiplier, ad_clip_skip, ad_restore_face, *cn_values = args
    global ad_args
    args = {
        "ad_model": ad_model,
        "ad_model_classes": "",
        "ad_prompt": ad_prompt,
        "ad_negative_prompt": ad_negative_prompt,
        "ad_confidence": ad_confidence,
        "ad_mask_k_largest": ad_mask_k_largest,
        "ad_mask_min_ratio": ad_mask_min_ratio,
        "ad_mask_max_ratio": ad_mask_max_ratio,
        "ad_dilate_erode": ad_dilate_erode,
        "ad_x_offset": ad_x_offset,
        "ad_y_offset": ad_y_offset,
        "ad_mask_merge_invert": ad_mask_merge_invert,
        "ad_mask_blur": ad_mask_blur,
        "ad_denoising_strength": ad_denoising_strength,
        "ad_inpaint_only_masked": ad_inpaint_only_masked,
        "ad_inpaint_only_masked_padding": ad_inpaint_only_masked_padding,
        "ad_use_inpaint_width_height": ad_use_inpaint_width_height,
        "ad_inpaint_width": ad_inpaint_width,
        "ad_inpaint_height": ad_inpaint_height,
        "ad_use_steps": ad_use_steps,
        "ad_steps": ad_steps,
        "ad_use_cfg_scale": ad_use_cfg_scale,
        "ad_cfg_scale": ad_cfg_scale,
        "ad_use_checkpoint": ad_use_checkpoint,
        "ad_checkpoint": ad_checkpoint,
        "ad_use_vae": ad_use_vae,
        "ad_vae": ad_vae,
        "ad_use_sampler": ad_use_sampler,
        "ad_sampler": ad_sampler,
        "ad_scheduler": ad_scheduler,
        "ad_use_noise_multiplier": ad_use_noise_multiplier,
        "ad_noise_multiplier": ad_noise_multiplier,
        "ad_use_clip_skip": ad_use_clip_skip,
        "ad_clip_skip": ad_clip_skip,
        "ad_restore_face": ad_restore_face,
    }
    if "sd-webui-controlnet" in extensions:
        ad_controlnet_model, ad_controlnet_module, ad_controlnet_weight, ad_controlnet_guidance_start, ad_controlnet_guidance_end = cn_values
        args["ad_controlnet_model"] = ad_controlnet_model
        args["ad_controlnet_module"] = ad_controlnet_module
        args["ad_controlnet_weight"] = ad_controlnet_weight
        args["ad_controlnet_guidance_start"] = ad_controlnet_guidance_start
        args["ad_controlnet_guidance_end"] = ad_controlnet_guidance_end
    if enable_ad:
        ad_args[ad_gen_type][ad_num] = args
    else:
        # pop() avoids a KeyError when this unit was never enabled.
        ad_args[ad_gen_type].pop(ad_num, None)

def ad_update_cn_module_choices(ad_controlnet_model):
    # Preprocessor choices per ControlNet model; the first entry is the default.
    modules_by_model = {
        "control_v11f1p_sd15_depth [1a8eb83c]": ["depth_midas", "depth_hand_refiner"],
        "control_v11p_sd15_inpaint [dfe64acb]": ["inpaint_global_harmonious", "inpaint_only", "inpaint_only+lama"],
        "control_v11p_sd15_lineart [2c3004a6]": ["lineart_coarse", "lineart_realistic", "lineart_anime", "lineart_anime_denoise"],
        "control_v11p_sd15_openpose [52e0ea54]": ["openpose_full", "dw_openpose_full"],
        "control_v11p_sd15_scribble [46a6fcd7]": ["t2ia_sketch_pidi"],
        "control_v11p_sd15s2_lineart_anime [19a26aa8]": ["lineart_coarse", "lineart_realistic", "lineart_anime", "lineart_anime_denoise"],
    }
    modules = modules_by_model.get(ad_controlnet_model)
    if modules is None:
        return gr.update(visible=False)
    return gr.update(choices=modules, visible=True, value=modules[0])


def ad_update_skip_img2img(arg):
    global ad_skip_img2img
    ad_skip_img2img = arg

def ad_blocks(i, gen_type):
    with gr.Blocks() as demo:
        ad_gen_type = gr.Textbox(visible=False, value=gen_type)
        ad_num = gr.Textbox(visible=False, value=i)
        enable_ad = gr.Checkbox(label="Enable")
        ad_model = gr.Dropdown(ad_models, label="ADetailer model", value=default["ad_model"][i])
        ad_prompt = gr.Textbox(show_label=False, placeholder="ADetailer prompt" + "\nIf blank, the main prompt is used.", lines=3)
        ad_negative_prompt = gr.Textbox(show_label=False, placeholder="ADetailer negative prompt" + "\nIf blank, the main negative prompt is used.", lines=3)
        with gr.Tab("Detection"):
            with gr.Row():
                ad_confidence = gr.Slider(label="Detection model confidence threshold", minimum=0, maximum=1, step=0.01, value=0.3)
                ad_mask_min_ratio = gr.Slider(label="Mask min area ratio", minimum=0, maximum=1, step=0.001, value=0)
            with gr.Row():
                ad_mask_k_largest = gr.Slider(label="Mask only the top k largest (0 to disable)", minimum=0, maximum=10, step=1, value=0)
                ad_mask_max_ratio = gr.Slider(label="Mask max area ratio", minimum=0, maximum=1, step=0.001, value=1)
        with gr.Tab("Mask Preprocessing"):
            with gr.Row():
                ad_x_offset = gr.Slider(label="Mask x(→) offset", minimum=-200, maximum=200, step=1, value=0)
                ad_y_offset = gr.Slider(label="Mask y(↑) offset", minimum=-200, maximum=200, step=1, value=0)
                ad_dilate_erode = gr.Slider(label="Mask erosion (-) / dilation (+)", minimum=-128, maximum=128, step=4, value=4)
            ad_mask_merge_invert = gr.Radio(["None", "Merge", "Merge and Invert"], label="Mask merge mode", value="None")
        with gr.Tab("Inpainting"):
            with gr.Row():
                ad_mask_blur = gr.Slider(label="Inpaint mask blur", minimum=0, maximum=64, step=1, value=4)
                ad_denoising_strength = gr.Slider(label="Inpaint denoising strength", minimum=0, maximum=1, step=0.01, value=0.4)
            with gr.Row():
                ad_inpaint_only_masked = gr.Checkbox(label="Inpaint only masked", value=True)
                ad_use_inpaint_width_height = gr.Checkbox(label="Use separate width/height")
            with gr.Row():
                ad_inpaint_only_masked_padding = gr.Slider(label="Inpaint only masked padding, pixels", minimum=0, maximum=256, step=4, value=32)
                with gr.Column():
                    ad_inpaint_width = gr.Slider(label="inpaint width", minimum=64, maximum=2048, step=default["size_step"], value=512)
                    ad_inpaint_height = gr.Slider(label="inpaint height", minimum=64, maximum=2048, step=default["size_step"], value=512)
            with gr.Row():
                ad_use_steps = gr.Checkbox(label="Use separate steps")
                ad_use_cfg_scale = gr.Checkbox(label="Use separate CFG scale")
            with gr.Row():
                ad_steps = gr.Slider(label="ADetailer steps", minimum=1, maximum=150, step=1, value=28)
                ad_cfg_scale = gr.Slider(label="ADetailer CFG scale", minimum=0, maximum=30, step=0.5, value=7)
            with gr.Row():
                ad_use_checkpoint = gr.Checkbox(label="Use separate checkpoint")
                ad_use_vae = gr.Checkbox(label="Use separate VAE")
            with gr.Row():
                ckpts = ["Use same checkpoint"] + list(sd_models)
                ad_checkpoint = gr.Dropdown(ckpts, label="ADetailer checkpoint", value=ckpts[0])
                vaes = ["Use same VAE"] + list(sd_vaes)
                ad_vae = gr.Dropdown(vaes, label="ADetailer VAE", value=vaes[0])
            ad_use_sampler = gr.Checkbox(label="Use separate sampler")
            with gr.Row():
                ad_sampler = gr.Dropdown(samplers, label="ADetailer sampler", value=samplers[0])
                scheduler_names = ["Use same scheduler"] + list(schedulers)
                ad_scheduler = gr.Dropdown(scheduler_names, label="ADetailer scheduler", value=scheduler_names[0])
            with gr.Row():
                ad_use_noise_multiplier = gr.Checkbox(label="Use separate noise multiplier")
                ad_use_clip_skip = gr.Checkbox(label="Use separate CLIP skip")
            with gr.Row():
                ad_noise_multiplier = gr.Slider(label="Noise multiplier for img2img", minimum=0.5, maximum=1.5, step=0.01, value=1)
                ad_clip_skip = gr.Slider(label="ADetailer CLIP skip", minimum=1, maximum=12, step=1, value=1)
            ad_restore_face = gr.Checkbox(label="Restore faces after ADetailer")
        if "sd-webui-controlnet" in extensions:
            with gr.Tab("ControlNet"):
                with gr.Row():
                    ad_cn_models = ["None", "Passthrough", "control_v11f1p_sd15_depth [1a8eb83c]", "control_v11p_sd15_inpaint [dfe64acb]", "control_v11p_sd15_lineart [2c3004a6]", "control_v11p_sd15_openpose [52e0ea54]", "control_v11p_sd15_scribble [46a6fcd7]", "control_v11p_sd15s2_lineart_anime [19a26aa8]"]
                    ad_controlnet_model = gr.Dropdown(ad_cn_models, label="ControlNet model", value="None")
                    ad_controlnet_module = gr.Dropdown(["None"], label="ControlNet module", value="None", visible=False)
                    ad_controlnet_model.change(fn=ad_update_cn_module_choices, inputs=ad_controlnet_model, outputs=ad_controlnet_module)
                with gr.Row():
                    ad_controlnet_weight = gr.Slider(label="Control Weight", minimum=0, maximum=1, step=0.01, value=1)
                    ad_controlnet_guidance_start = gr.Slider(label="Starting Control Step", minimum=0, maximum=1, step=0.01, value=0)
                    ad_controlnet_guidance_end = gr.Slider(label="Ending Control Step", minimum=0, maximum=1, step=0.01, value=1)
        ad_inputs = [ad_gen_type, ad_num, enable_ad, ad_model, ad_prompt, ad_negative_prompt, ad_confidence, ad_mask_min_ratio, ad_mask_k_largest, ad_mask_max_ratio, ad_x_offset, ad_y_offset, ad_dilate_erode, ad_mask_merge_invert, ad_mask_blur, ad_denoising_strength, ad_inpaint_only_masked, ad_use_inpaint_width_height, ad_inpaint_only_masked_padding, ad_inpaint_width, ad_inpaint_height, ad_use_steps, ad_use_cfg_scale, ad_steps, ad_cfg_scale, ad_use_checkpoint, ad_use_vae, ad_checkpoint, ad_vae, ad_use_sampler, ad_sampler, ad_scheduler, ad_use_noise_multiplier, ad_use_clip_skip, ad_noise_multiplier, ad_clip_skip, ad_restore_face]
        if "sd-webui-controlnet" in extensions:
            ad_inputs += [ad_controlnet_model, ad_controlnet_module, ad_controlnet_weight, ad_controlnet_guidance_start, ad_controlnet_guidance_end]
        for gr_block in ad_inputs:
            if isinstance(gr_block, gr.Slider):
                gr_block.release(fn=ad_update_args, inputs=ad_inputs, outputs=None)
            else:
                gr_block.change(fn=ad_update_args, inputs=ad_inputs, outputs=None)
    return demo

def add_controlnet(payload, gen_type):
    global cn_args
    args = cn_args[gen_type]
    args = dict(sorted(args.items(), key=lambda x: x[0]))
    payload["alwayson_scripts"]["controlnet"] = {"args": []}
    if args == {}:
        return payload
    for i in args:
        payload["alwayson_scripts"]["controlnet"]["args"].append(args[i])
    return payload


def cn_preprocess(cn_module, cn_input_image):
    if cn_input_image is None:
        return None
    cn_input_image = pil_to_base64(cn_input_image)
    payload = {
        "controlnet_module": cn_module,
        "controlnet_input_images": [cn_input_image]
    }
    response = requests.post(url=f"{url}/controlnet/detect", json=payload)
    images_base64 = response.json()["images"][0]
    image_pil = base64_to_pil(images_base64)
    if save_images == "Yes":
        save_image(image_pil, "ControlNet", "detect")
    return image_pil

def cn_update_args(*args):
    cn_gen_type, cn_num, enable_cn, enable_low_vram, enable_pixel_perfect, cn_module, cn_model, cn_input_image, cn_mask, cn_weight, cn_guidance_start, cn_guidance_end, cn_resolution, cn_control_mode, cn_resize_mode = args
    global cn_args
    # The API expects images as base64 strings.
    if cn_input_image is not None:
        cn_input_image = pil_to_base64(cn_input_image)
    if cn_mask is not None:
        cn_mask = pil_to_base64(cn_mask)
    args = {
        "input_image": cn_input_image,
        "module": cn_module,
        "model": cn_model,
        "low_vram": enable_low_vram,
        "pixel_perfect": enable_pixel_perfect,
        "mask": cn_mask,
        "weight": cn_weight,
        "guidance_start": cn_guidance_start,
        "guidance_end": cn_guidance_end,
        "processor_res": cn_resolution,
        "control_mode": cn_control_mode,
        "resize_mode": cn_resize_mode
    }
    if enable_cn:
        cn_args[cn_gen_type][cn_num] = args
    else:
        # pop() avoids a KeyError when this unit was never enabled.
        cn_args[cn_gen_type].pop(cn_num, None)

def cn_update_choices(cn_type):
    module_list = cn_types_list[cn_type]["module_list"]
    model_list = cn_types_list[cn_type]["model_list"]
    default_option = cn_types_list[cn_type]["default_option"]
    default_model = cn_types_list[cn_type]["default_model"]
    return gr.update(choices=module_list, value=default_option), gr.update(choices=model_list, value=default_model)


def cn_blocks(i, gen_type):
    with gr.Blocks() as demo:
        with gr.Row():
            cn_gen_type = gr.Textbox(visible=False, value=gen_type)
            cn_num = gr.Textbox(visible=False, value=i)
            enable_cn = gr.Checkbox(label="Enable")
            enable_low_vram = gr.Checkbox(label="Low VRAM")
            enable_pixel_perfect = gr.Checkbox(label="Pixel Perfect")
            enable_mask_upload = gr.Checkbox(label="Effective Region Mask")
        with gr.Row():
            cn_type = gr.Dropdown(cn_types, label="ControlNet type", value=cn_default_type)
            cn_btn = gr.Button("Preprocess", elem_id="button")
        with gr.Row():
            cn_module = gr.Dropdown(cn_module_list, label="ControlNet module", value=cn_default_option)
            cn_model = gr.Dropdown(cn_model_list, label="ControlNet model", value=cn_default_model)
        with gr.Row():
            cn_input_image = gr.Image(type="pil")
            cn_detect_image = gr.Image(label="Preprocessor Preview")
            # type="pil" so that cn_update_args can pass the mask to pil_to_base64.
            cn_mask = gr.Image(label="Effective Region Mask", type="pil", interactive=True, visible=False)
        with gr.Row():
            cn_weight = gr.Slider(label="Control Weight", minimum=0, maximum=2, step=0.05, value=1)
            cn_guidance_start = gr.Slider(label="Starting Control Step", minimum=0, maximum=1, step=0.01, value=0)
            cn_guidance_end = gr.Slider(label="Ending Control Step", minimum=0, maximum=1, step=0.01, value=1)
        cn_resolution = gr.Slider(label="Resolution", minimum=64, maximum=2048, step=default["size_step"], value=512)
        cn_control_mode = gr.Radio(["Balanced", "My prompt is more important", "ControlNet is more important"], label="Control Mode", value="Balanced")
        cn_resize_mode = gr.Radio(["Just Resize", "Crop and Resize", "Resize and Fill"], label="Resize Mode", value="Crop and Resize")
        enable_mask_upload.change(fn=gr_update_visible, inputs=enable_mask_upload, outputs=cn_mask)
        cn_type.change(fn=cn_update_choices, inputs=cn_type, outputs=[cn_module, cn_model])
        cn_btn.click(fn=cn_preprocess, inputs=[cn_module, cn_input_image], outputs=cn_detect_image)
        cn_inputs = [cn_gen_type, cn_num, enable_cn, enable_low_vram, enable_pixel_perfect, cn_module, cn_model, cn_input_image, cn_mask, cn_weight, cn_guidance_start, cn_guidance_end, cn_resolution, cn_control_mode, cn_resize_mode]
        for gr_block in cn_inputs:
            if isinstance(gr_block, gr.Slider):
                gr_block.release(fn=cn_update_args, inputs=cn_inputs, outputs=None)
            else:
                gr_block.change(fn=cn_update_args, inputs=cn_inputs, outputs=None)
    return demo

def generate(input_image, sd_model, sd_vae, sampler_name, scheduler, clip_skip, steps, width, batch_size, height, batch_count, cfg_scale, randn_source, seed, denoising_strength, prompt, negative_prompt, progress=gr.Progress()):
    global interrupt, xyz_args
    interrupt = False
    # The txt2img tab passes a hidden denoising_strength of -1; img2img passes 0..1.
    if denoising_strength >= 0:
        gen_type = "img2img"
        if input_image is None:
            return None, None, None
    else:
        gen_type = "txt2img"
    progress(0, desc=f"Loading {sd_model}")
    # Switch checkpoint, VAE, CLIP skip and RNG source through the options endpoint.
    payload = {
        "sd_model_checkpoint": sd_models_list[sd_model],
        "sd_vae": sd_vae,
        "CLIP_stop_at_last_layers": clip_skip,
        "randn_source": randn_source
    }
    requests.post(url=f"{url}/sdapi/v1/options", json=payload)
    if interrupt:
        return None, None, None
    progress(0, desc="Processing...")
    images = []
    images_info = []
    if input_image is not None:
        input_image = pil_to_base64(input_image)
    for i in range(batch_count):
        payload = {
            "prompt": prompt,
            "negative_prompt": negative_prompt,
            "batch_size": batch_size,
            "seed": seed,
            "sampler_name": sampler_name,
            "scheduler": scheduler,
            "steps": steps,
            "cfg_scale": cfg_scale,
            "width": width,
            "height": height,
            "init_images": [input_image],
            "denoising_strength": denoising_strength,
            "alwayson_scripts": {}
        }
        if "adetailer" in extensions:
            payload = add_adetailer(payload, gen_type)
        if "sd-webui-controlnet" in extensions:
            payload = add_controlnet(payload, gen_type)
        payload = add_xyz_plot(payload, gen_type)
        response = requests.post(url=f"{url}/sdapi/v1/{gen_type}", json=payload)
        images_base64 = response.json()["images"]
        for j in range(len(images_base64)):
            image_pil = base64_to_pil(images_base64[j])
            images.append(image_pil)
            # get_png_info is not defined above; it is expected further down in this script.
            image_info = get_png_info(image_pil)
            images_info.append(image_info)
            if image_info == "None":
                # Images without generation info are X/Y/Z grids or ControlNet detection maps.
                if save_images == "Yes":
                    if gen_type in xyz_args:
                        save_image(image_pil, "XYZ_Plot", "grid")
                    else:
                        save_image(image_pil, "ControlNet", "detect")
            else:
                seed = re.findall("Seed: [0-9]+", image_info)[0].split(": ")[-1]
                if save_images == "Yes":
                    save_image(image_pil, sd_model, seed)
        # Continue the next batch from the seed after the last one used.
        seed = int(seed) + 1
        progress((i+1)/batch_count, desc=f"Batch count: {(i+1)}/{batch_count}")
        if interrupt:
            return images, images_info, datetime.datetime.now()
    return images, images_info, datetime.datetime.now()

def gen_clear_geninfo():
    return None


def gen_update_geninfo(images_info):
    if images_info == [] or images_info is None:
        return None
    return images_info[0]


def gen_update_selected_geninfo(images_info, evt: gr.SelectData):
    return images_info[evt.index]

def gen_blocks(gen_type):
    with gr.Blocks() as demo:
        with gr.Row():
            with gr.Column():
                prompt = gr.Textbox(placeholder="Prompt", show_label=False, value=default["prompt"], lines=3)
                negative_prompt = gr.Textbox(placeholder="Negative prompt", show_label=False, value=default["negative_prompt"], lines=3)
                if gen_type == "txt2img":
                    input_image = gr.Image(visible=False)
                else:
                    input_image = gr.Image(type="pil")
                with gr.Tab("Generation"):
                    with gr.Row():
                        sd_model = gr.Dropdown(sd_models, label="SD Model", value=sd_models[0])
                        sd_vae = gr.Dropdown(sd_vaes, label="SD VAE", value=sd_vaes[0])
                        clip_skip = gr.Slider(minimum=1, maximum=12, step=1, label="Clip skip", value=default["clip_skip"])
                    with gr.Row():
                        sampler_name = gr.Dropdown(samplers, label="Sampling method", value=samplers[0])
                        scheduler = gr.Dropdown(schedulers, label="Schedule type", value=schedulers[0])
                        steps = gr.Slider(minimum=1, maximum=100, step=1, label="Sampling steps", value=default["steps"])
                    with gr.Row():
                        width = gr.Slider(minimum=64, maximum=2048, step=default["size_step"], label="Width", value=default["width"])
                        batch_size = gr.Slider(minimum=1, maximum=8, step=1, label="Batch size", value=1)
                    with gr.Row():
                        height = gr.Slider(minimum=64, maximum=2048, step=default["size_step"], label="Height", value=default["height"])
                        batch_count = gr.Slider(minimum=1, maximum=100, step=1, label="Batch count", value=1)
                    with gr.Row():
                        cfg_scale = gr.Slider(minimum=1, maximum=30, step=0.5, label="CFG Scale", value=default["cfg"])
                        if gen_type == "txt2img":
                            denoising_strength = gr.Slider(minimum=-1, maximum=1, step=1, value=-1, visible=False)
                        else:
                            denoising_strength = gr.Slider(minimum=0.0, maximum=1.0, step=0.01, label="Denoising strength", value=0.7)
                    with gr.Row():
                        randn_source = gr.Dropdown(["CPU", "GPU"], label="RNG", value="CPU")
                        seed = gr.Textbox(label="Seed", value=-1)
                if "adetailer" in extensions:
                    with gr.Tab("ADetailer"):
                        if gen_type == "img2img":
                            with gr.Row():
                                ad_skip_img2img = gr.Checkbox(label="Skip img2img", visible=True)
                                ad_skip_img2img.change(fn=ad_update_skip_img2img, inputs=ad_skip_img2img, outputs=None)
                        for i in range(default["ad_nums"]):
                            with gr.Tab(f"ADetailer {ordinal(i + 1)}"): ad_blocks(i, gen_type)
                if "sd-webui-controlnet" in extensions:
                    with gr.Tab("ControlNet"):
                        for i in range(default["cn_nums"]):
                            with gr.Tab(f"ControlNet Unit {i}"): cn_blocks(i, gen_type)
                if not loras == [] or not embeddings == []:
                    with gr.Tab("Extra Networks"):
                        if not loras == []:
                            lora = gr.Dropdown(loras, label="Lora", multiselect=True, interactive=True)
                            lora.change(fn=add_lora, inputs=[prompt, lora], outputs=prompt)
                        if not embeddings == []:
                            embedding = gr.Dropdown(embeddings, label="Embedding", multiselect=True, interactive=True)
                            embedding.change(fn=add_embedding, inputs=[negative_prompt, embedding], outputs=negative_prompt)
                with gr.Tab("X/Y/Z plot"): xyz_blocks(gen_type)
            with gr.Column():
                with gr.Row():
                    btn = gr.Button("Generate | 生成", elem_id="button")
                    btn2 = gr.Button("Interrupt | 终止")
                gallery = gr.Gallery(preview=True, height=default["gallery_height"])
                image_geninfo = gr.Markdown()
                images_geninfo = gr.State()
                update_geninfo = gr.Textbox(visible=False)
        gen_inputs = [input_image, sd_model, sd_vae, sampler_name, scheduler, clip_skip, steps, width, batch_size, height, batch_count, cfg_scale, randn_source, seed, denoising_strength, prompt, negative_prompt]
        btn.click(fn=gen_clear_geninfo, inputs=None, outputs=image_geninfo)
        btn.click(fn=generate, inputs=gen_inputs, outputs=[gallery, images_geninfo, update_geninfo])
        btn2.click(fn=post_interrupt, inputs=None, outputs=None)
        gallery.select(fn=gen_update_selected_geninfo, inputs=images_geninfo, outputs=image_geninfo)
        update_geninfo.change(fn=gen_update_geninfo, inputs=images_geninfo, outputs=image_geninfo)
    return demo

def extras(input_image, upscaler_1, upscaler_2, upscaling_resize, extras_upscaler_2_visibility, enable_gfpgan, gfpgan_visibility, enable_codeformer, codeformer_visibility, codeformer_weight):
    if input_image is None:
        return None
    input_image = pil_to_base64(input_image)
    if enable_gfpgan == False:
        gfpgan_visibility = 0
    if enable_codeformer == False:
        codeformer_visibility = 0
    payload = {
        "gfpgan_visibility": gfpgan_visibility,
        "codeformer_visibility": codeformer_visibility,
        "codeformer_weight": codeformer_weight,
        "upscaling_resize": upscaling_resize,
        "upscaler_1": upscaler_1,
        "upscaler_2": upscaler_2,
        "extras_upscaler_2_visibility": extras_upscaler_2_visibility,
        "image": input_image
    }
    response = requests.post(url=f"{url}/sdapi/v1/extra-single-image", json=payload)
    images_base64 = response.json()["image"]
    image_pil = base64_to_pil(images_base64)
    if save_images == "Yes":
        save_image(image_pil, "Extras", "image")
    return image_pil

def extras_blocks():
    with gr.Blocks() as demo:
        with gr.Row():
            with gr.Column():
                input_image = gr.Image(type="pil")
                with gr.Row():
                    upscaler_1 = gr.Dropdown(upscalers, label="Upscaler 1", value="R-ESRGAN 4x+")
                    upscaler_2 = gr.Dropdown(upscalers, label="Upscaler 2", value="None")
                with gr.Row():
                    upscaling_resize = gr.Slider(minimum=1, maximum=8, step=0.05, label="Scale by", value=4)
                    extras_upscaler_2_visibility = gr.Slider(minimum=0, maximum=1, step=0.001, label="Upscaler 2 visibility", value=0)
                enable_gfpgan = gr.Checkbox(label="Enable GFPGAN")
                gfpgan_visibility = gr.Slider(minimum=0, maximum=1, step=0.001, label="GFPGAN Visibility", value=1)
                enable_codeformer = gr.Checkbox(label="Enable CodeFormer")
                codeformer_visibility = gr.Slider(minimum=0, maximum=1, step=0.001, label="CodeFormer Visibility", value=1)
                codeformer_weight = gr.Slider(minimum=0, maximum=1, step=0.001, label="Weight (0 = maximum effect, 1 = minimum effect)", value=0)
            with gr.Column():
                with gr.Row():
                    btn = gr.Button("Generate | 生成", elem_id="button")
                    btn2 = gr.Button("Interrupt | 终止")
                extra_image = gr.Image(label="Extras image")
        btn.click(fn=extras, inputs=[input_image, upscaler_1, upscaler_2, upscaling_resize, extras_upscaler_2_visibility, enable_gfpgan, gfpgan_visibility, enable_codeformer, codeformer_visibility, codeformer_weight], outputs=extra_image)
        btn2.click(fn=post_interrupt, inputs=None, outputs=None)
    return demo

def get_png_info(image_pil):
    if image_pil is None:
        return None
    # Keep only text entries: Pillow's info dict can also hold non-string values (for example a dpi tuple).
    image_info = [value for value in image_pil.info.values() if isinstance(value, str)]
    if not image_info:
        return "None"
    image_info = image_info[0]
    # Escape angle brackets and convert newlines so the text displays correctly in gr.Markdown.
    image_info = image_info.replace("<", "\\<").replace(">", "\\>")
    image_info = image_info.replace("\n", "<br>")
    return image_info

def png_info_blocks():
    with gr.Blocks() as demo:
        with gr.Row():
            with gr.Column():
                input_image = gr.Image(value=None, type="pil")
            with gr.Column():
                png_info = gr.Markdown()
        input_image.change(fn=get_png_info, inputs=input_image, outputs=png_info)
    return demo

with gr.Blocks(css="#button {background: #FFE1C0; color: #FF453A} .block.padded:not(.gradio-accordion) {padding: 0 !important;} div.form {border-width: 0; box-shadow: none; background: white; gap: 0.5em;}") as demo:
    with gr.Tab("txt2img"): gen_blocks("txt2img")
    with gr.Tab("img2img"): gen_blocks("img2img")
    with gr.Tab("Extras"): extras_blocks()
    with gr.Tab("PNG Info"): png_info_blocks()

# concurrency_count is a Gradio 3.x argument; Gradio 4 replaced it with default_concurrency_limit.
demo.queue(concurrency_count=100).launch(inbrowser=True)
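
The `generate` and `get_png_info` functions in the listing rely on two small text-processing steps: reading the seed from the generation-info string, and escaping that string so it can be shown in a `gr.Markdown` panel. The following is a minimal standard-library sketch of those steps in isolation. The helper names `parse_seed`, `next_seed` and `to_markdown`, and the sample text in `SAMPLE_INFO`, are illustrative assumptions and are not part of the original code. Unlike the listing, the sketch returns `None` instead of raising an error when no seed is present.

```python
import re

# Hypothetical example of the generation-info text stored in a PNG (an assumption for illustration).
SAMPLE_INFO = (
    "1girl, <lora:example:0.8>\n"
    "Negative prompt: lowres\n"
    "Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 12345, Size: 512x768"
)


def parse_seed(infotext):
    """Return the seed recorded in the info text as an int, or None if there is none."""
    match = re.search(r"Seed: (\d+)", infotext)
    return int(match.group(1)) if match else None


def next_seed(infotext, fallback=-1):
    """Seed for the following batch: the parsed seed plus one, or the fallback if no seed was found."""
    seed = parse_seed(infotext)
    return fallback if seed is None else seed + 1


def to_markdown(infotext):
    """Backslash-escape angle brackets and turn newlines into <br> tags for Markdown display."""
    escaped = infotext.replace("<", "\\<").replace(">", "\\>")
    return escaped.replace("\n", "<br>")


if __name__ == "__main__":
    print(parse_seed(SAMPLE_INFO))
    print(next_seed(SAMPLE_INFO))
    print(to_markdown(SAMPLE_INFO))
```

Keeping these steps as pure functions makes them easy to check without a running webUI server; the request and image-decoding code can then call them on whatever info text the API returns.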
