Setting Up the ChatTTS WebUI Locally

广君有点高 2024-06-13 13:03:03

Note

This tutorial reproduces the modelscope.cn demo studio locally. The environment is Windows.

ChatTTS GitHub repository (original authors): https://github.com/2noise/ChatTTS

Online WebUI demo: https://modelscope.cn/studios/AI-ModelScope/ChatTTS-demo/summary

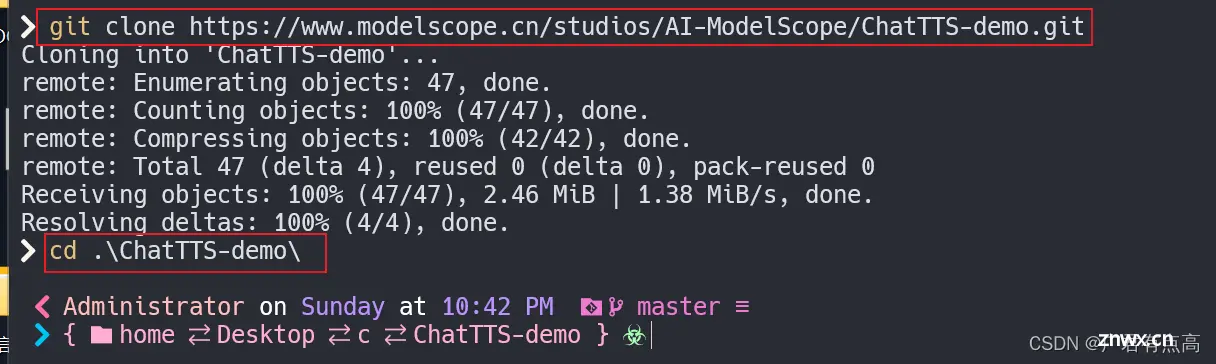

Step 1: Clone the code

First, run the following in a terminal to clone the ModelScope demo repository to your machine:

```shell
git clone https://www.modelscope.cn/studios/AI-ModelScope/ChatTTS-demo.git
```

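After cloning, change into the project directory (`cd ChatTTS-demo`).

Installing the packages listed in requirements.txt from the default index is very slow. Switching to a domestic mirror (here the Tsinghua TUNA PyPI mirror) makes the download much faster: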

```shell
pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
```
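On Windows it is easy to end up with several Python installations, and the `pip` on PATH is not always the one that belongs to the interpreter that will later run `app.py`. Here is a minimal sketch that drives the same mirror install from Python via `sys.executable -m pip`. It is an addition, not one of the original steps, and `pip_install_command` and `pip_install` are hypothetical helper names:

```python
import subprocess
import sys

# Tsinghua TUNA PyPI mirror used in this tutorial
TUNA_INDEX = "https://pypi.tuna.tsinghua.edu.cn/simple"


def pip_install_command(args, index_url=TUNA_INDEX):
    """Build a pip install command bound to the current interpreter (hypothetical helper)."""
    return [sys.executable, "-m", "pip", "install", *args, "-i", index_url]


def pip_install(args, index_url=TUNA_INDEX):
    """Run the install; raises subprocess.CalledProcessError if pip fails."""
    subprocess.run(pip_install_command(args, index_url), check=True)


# Same as: pip install -r requirements.txt -i <mirror>
print(pip_install_command(["-r", "requirements.txt"]))
```

Calling `pip_install(["-r", "requirements.txt"])` from the project directory performs the same install as the command above. The difference is that it is pinned to the interpreter running the script.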

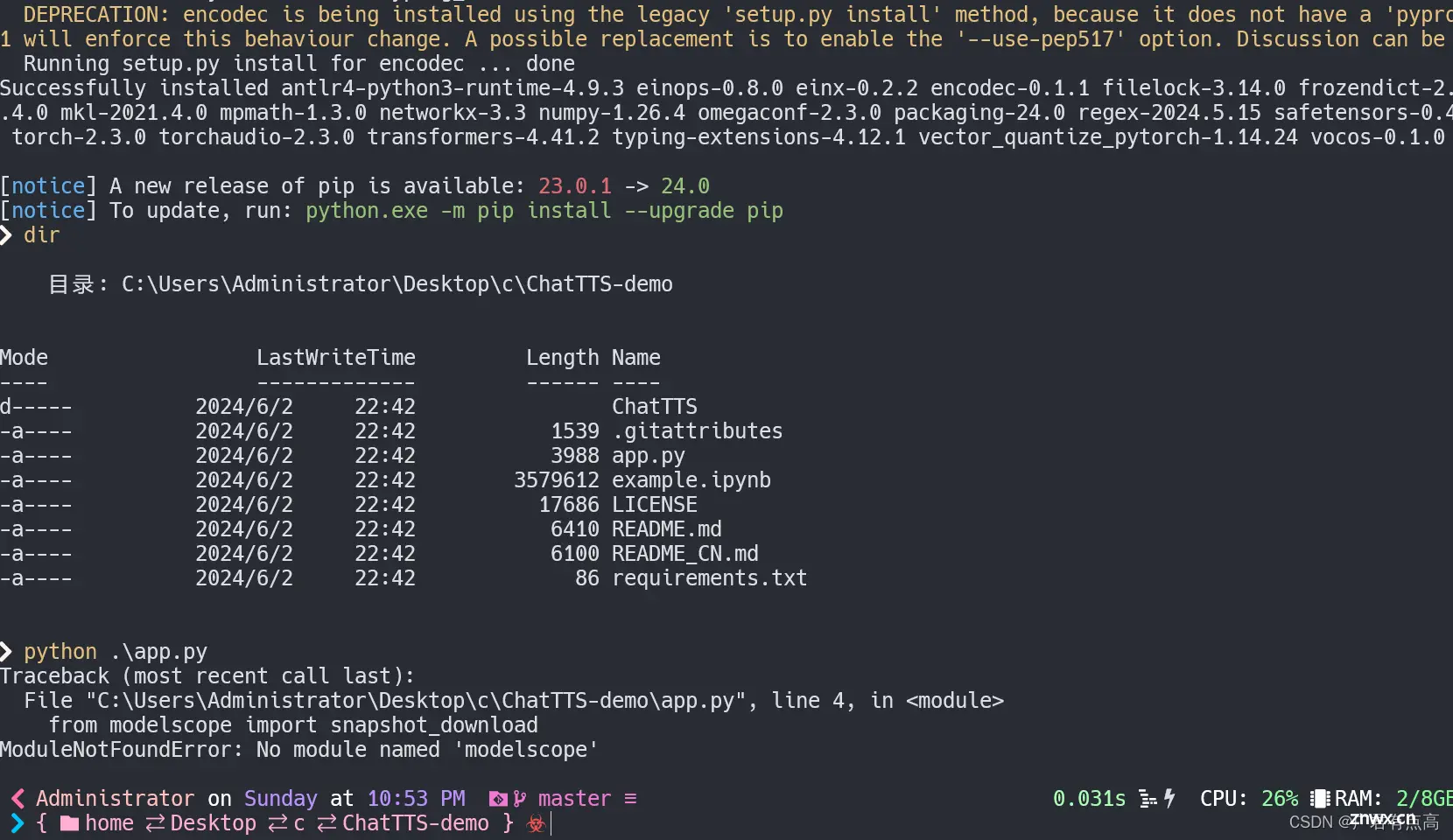

Step 2: Install additional libraries

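Even after that finishes, the app cannot be started yet because a few more libraries have to be installed manually.

Run the following commands in order: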

```shell
pip install modelscope -i https://pypi.tuna.tsinghua.edu.cn/simple
pip install gradio -i https://pypi.tuna.tsinghua.edu.cn/simple
```
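Before starting the app, a quick check that the key packages can be imported can save you a failed launch. Below is a minimal sketch. The module list is an assumption: it takes the third-party imports in core.py (`torch`, `vocos`, `omegaconf`, `huggingface_hub`) and adds the two packages installed above. `missing_packages` is a hypothetical helper:

```python
import importlib.util


def missing_packages(names):
    """Return the top-level import names that cannot be found in this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Import names assumed from core.py plus the manually installed packages
REQUIRED = ["torch", "vocos", "omegaconf", "huggingface_hub", "modelscope", "gradio"]

missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages found.")
```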

If both installs succeed, start the app:

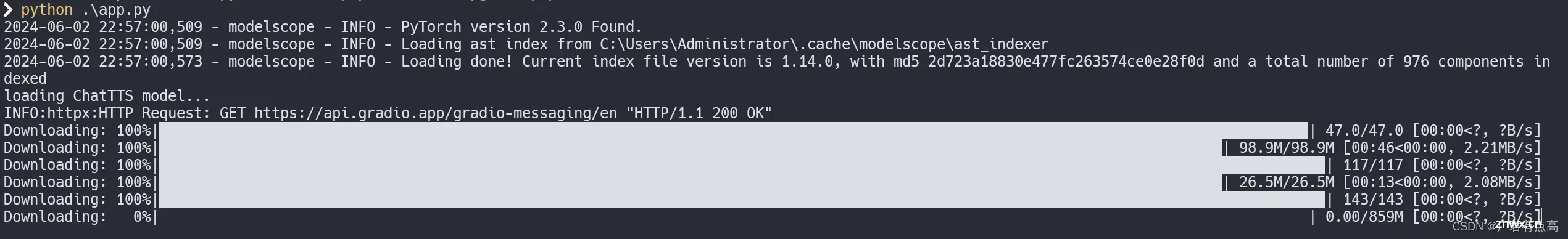

```shell
python app.py
```

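After starting it, wait for the program to finish downloading the model files. The progress bar may look frozen because it updates in large jumps. As long as your network bandwidth shows activity, the download is still running. Do not stop the program!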
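Instead of watching network bandwidth, you can watch the model cache grow on disk. Here is a minimal sketch. The cache location is an assumption: the Hugging Face branch of core.py uses `HF_HOME` (default `~/.cache/huggingface`) with `hub/models--2Noise--ChatTTS` below it. However, the demo's app.py may download through ModelScope into a different folder, so point it at whichever directory actually grows. `dir_size_bytes` and `watch_growth` are hypothetical helper names:

```python
import os
import time


def dir_size_bytes(path):
    """Sum the sizes of regular files under path (0 if it does not exist yet)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            fp = os.path.join(root, name)
            if os.path.islink(fp):
                continue  # HF snapshot entries may be symlinks to blobs; count each blob once
            try:
                total += os.path.getsize(fp)
            except OSError:
                pass  # file renamed or removed mid-scan (e.g. a finished partial download)
    return total


def watch_growth(path, interval=5.0, rounds=12):
    """Print the directory size and growth rate every `interval` seconds."""
    previous = dir_size_bytes(path)
    for _ in range(rounds):
        time.sleep(interval)
        current = dir_size_bytes(path)
        rate = (current - previous) / interval / (1024 * 1024)
        print(f"{current / (1024 * 1024):.1f} MiB total, {rate:.2f} MiB/s")
        previous = current


# Assumed location, following the Hugging Face cache layout used in core.py
hf_home = os.getenv("HF_HOME", os.path.expanduser("~/.cache/huggingface"))
cache_dir = os.path.join(hf_home, "hub", "models--2Noise--ChatTTS")
```

Run `watch_growth(cache_dir)` in a second terminal while app.py is downloading. A steadily increasing total means the download is progressing even if the progress bar is not.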

Troubleshooting

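I ran into only one error, which said that something was not supported on Windows. After changing some code according to the error message, the app ran. My first setup was on a physical machine. Once it worked, I decided to write this article and repeated the setup in a virtual machine, but there the errors were different again, which was frustrating. In the end I simply copied the contents of core.py from the physical machine into core.py on the virtual machine, and it ran. So just copy the code below over the cloned core.py.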

core.py is located in the ChatTTS directory.

```python
import os
import logging
from omegaconf import OmegaConf
import platform

import torch
from vocos import Vocos
from .model.dvae import DVAE
from .model.gpt import GPT_warpper
from .utils.gpu_utils import select_device
from .utils.infer_utils import count_invalid_characters, detect_language
from .utils.io_utils import get_latest_modified_file
from .infer.api import refine_text, infer_code

from huggingface_hub import snapshot_download

logging.basicConfig(level=logging.INFO)


class Chat:
    def __init__(self):
        self.pretrain_models = {}
        self.normalizer = {}
        self.logger = logging.getLogger(__name__)

    def check_model(self, level=logging.INFO, use_decoder=False):
        # Verify that every module needed for inference has been loaded.
        not_finish = False
        check_list = ['vocos', 'gpt', 'tokenizer']

        if use_decoder:
            check_list.append('decoder')
        else:
            check_list.append('dvae')

        for module in check_list:
            if module not in self.pretrain_models:
                self.logger.log(logging.WARNING, f'{module} not initialized.')
                not_finish = True

        if not not_finish:
            self.logger.log(level, 'All initialized.')

        return not not_finish

    def load_models(self, source='huggingface', force_redownload=False, local_path='<LOCAL_PATH>', **kwargs):
        if source == 'huggingface':
            hf_home = os.getenv('HF_HOME', os.path.expanduser("~/.cache/huggingface"))
            try:
                download_path = get_latest_modified_file(os.path.join(hf_home, 'hub/models--2Noise--ChatTTS/snapshots'))
            except Exception:  # e.g. no cached snapshot yet
                download_path = None
            if download_path is None or force_redownload:
                self.logger.log(logging.INFO, 'Download from HF: https://huggingface.co/2Noise/ChatTTS')
                download_path = snapshot_download(repo_id="2Noise/ChatTTS", allow_patterns=["*.pt", "*.yaml"])
            else:
                self.logger.log(logging.INFO, f'Load from cache: {download_path}')
        elif source == 'local':
            self.logger.log(logging.INFO, f'Load from local: {local_path}')
            download_path = local_path

        # config/path.yaml maps each _load() argument to a file relative to download_path
        self._load(**{k: os.path.join(download_path, v) for k, v in OmegaConf.load(os.path.join(download_path, 'config', 'path.yaml')).items()}, **kwargs)

    def _load(
        self,
        vocos_config_path: str = None,
        vocos_ckpt_path: str = None,
        dvae_config_path: str = None,
        dvae_ckpt_path: str = None,
        gpt_config_path: str = None,
        gpt_ckpt_path: str = None,
        decoder_config_path: str = None,
        decoder_ckpt_path: str = None,
        tokenizer_path: str = None,
        device: str = None,
        compile: bool = True,
    ):
        if not device:
            device = select_device(4096)
            self.logger.log(logging.INFO, f'use {device}')

        if vocos_config_path:
            vocos = Vocos.from_hparams(vocos_config_path).to(device).eval()
            assert vocos_ckpt_path, 'vocos_ckpt_path should not be None'
            vocos.load_state_dict(torch.load(vocos_ckpt_path, map_location='cpu'))
            self.pretrain_models['vocos'] = vocos
            self.logger.log(logging.INFO, 'vocos loaded.')

        if dvae_config_path:
            cfg = OmegaConf.load(dvae_config_path)
            dvae = DVAE(**cfg).to(device).eval()
            assert dvae_ckpt_path, 'dvae_ckpt_path should not be None'
            dvae.load_state_dict(torch.load(dvae_ckpt_path, map_location='cpu'))
            self.pretrain_models['dvae'] = dvae
            self.logger.log(logging.INFO, 'dvae loaded.')

        if gpt_config_path:
            cfg = OmegaConf.load(gpt_config_path)
            gpt = GPT_warpper(**cfg).to(device).eval()
            assert gpt_ckpt_path, 'gpt_ckpt_path should not be None'
            gpt.load_state_dict(torch.load(gpt_ckpt_path, map_location='cpu'))
            # Windows fix: skip torch.compile (inductor backend) on Windows, where it raised an error
            if compile and platform.system() != 'Windows':
                gpt.gpt.forward = torch.compile(gpt.gpt.forward, backend='inductor', dynamic=True)
            self.pretrain_models['gpt'] = gpt
            spk_stat_path = os.path.join(os.path.dirname(gpt_ckpt_path), 'spk_stat.pt')
            assert os.path.exists(spk_stat_path), f'Missing spk_stat.pt: {spk_stat_path}'
            self.pretrain_models['spk_stat'] = torch.load(spk_stat_path, map_location='cpu').to(device)
            self.logger.log(logging.INFO, 'gpt loaded.')

        if decoder_config_path:
            cfg = OmegaConf.load(decoder_config_path)
            decoder = DVAE(**cfg).to(device).eval()
            assert decoder_ckpt_path, 'decoder_ckpt_path should not be None'
            decoder.load_state_dict(torch.load(decoder_ckpt_path, map_location='cpu'))
            self.pretrain_models['decoder'] = decoder
            self.logger.log(logging.INFO, 'decoder loaded.')

        if tokenizer_path:
            tokenizer = torch.load(tokenizer_path, map_location='cpu')
            tokenizer.padding_side = 'left'
            self.pretrain_models['tokenizer'] = tokenizer
            self.logger.log(logging.INFO, 'tokenizer loaded.')

        self.check_model()

    def infer(
        self,
        text,
        skip_refine_text=False,
        refine_text_only=False,
        params_refine_text={},
        params_infer_code={'prompt': '[speed_5]'},
        use_decoder=True,
        do_text_normalization=False,
        lang=None,
    ):
        assert self.check_model(use_decoder=use_decoder)

        # Work on a copy: the pop('prompt') below would otherwise mutate the shared
        # default dict (or the caller's dict) and drop the prompt on later calls.
        params_infer_code = dict(params_infer_code)

        if not isinstance(text, list):
            text = [text]

        if do_text_normalization:
            for i, t in enumerate(text):
                _lang = detect_language(t) if lang is None else lang
                self.init_normalizer(_lang)
                text[i] = self.normalizer[_lang].normalize(t, verbose=False, punct_post_process=True)

        for i in text:
            invalid_characters = count_invalid_characters(i)
            if len(invalid_characters):
                self.logger.log(logging.WARNING, f'Invalid characters found! : {invalid_characters}')

        if not skip_refine_text:
            text_tokens = refine_text(self.pretrain_models, text, **params_refine_text)['ids']
            # Keep only tokens below the '[break_0]' id (drops control tokens)
            text_tokens = [i[i < self.pretrain_models['tokenizer'].convert_tokens_to_ids('[break_0]')] for i in text_tokens]
            text = self.pretrain_models['tokenizer'].batch_decode(text_tokens)
            if refine_text_only:
                return text

        text = [params_infer_code.get('prompt', '') + i for i in text]
        params_infer_code.pop('prompt', '')
        result = infer_code(self.pretrain_models, text, **params_infer_code, return_hidden=use_decoder)

        if use_decoder:
            mel_spec = [self.pretrain_models['decoder'](i[None].permute(0, 2, 1)) for i in result['hiddens']]
        else:
            mel_spec = [self.pretrain_models['dvae'](i[None].permute(0, 2, 1)) for i in result['ids']]

        wav = [self.pretrain_models['vocos'].decode(i).cpu().numpy() for i in mel_spec]

        return wav

    def sample_random_speaker(self):
        # Draw a random speaker embedding from the saved speaker statistics (std, mean)
        dim = self.pretrain_models['gpt'].gpt.layers[0].mlp.gate_proj.in_features
        std, mean = self.pretrain_models['spk_stat'].chunk(2)
        return torch.randn(dim, device=std.device) * std + mean

    def init_normalizer(self, lang):
        # Lazily create one NeMo text normalizer per language
        if lang not in self.normalizer:
            from nemo_text_processing.text_normalization.normalize import Normalizer
            self.normalizer[lang] = Normalizer(input_case='cased', lang=lang)
```
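The Windows-specific part of this core.py is the `platform.system() != 'Windows'` check. It means `torch.compile` with the `inductor` backend is never called on Windows, and the GPT module runs in eager mode instead. The original error was most likely PyTorch's "Windows not yet supported for torch.compile". The same guard can be factored into a small helper, sketched below. `maybe_compile` is a hypothetical name and is not part of ChatTTS. The compile function is injected so the sketch runs without PyTorch installed:

```python
import platform


def maybe_compile(fn, compile_fn=None, system=None):
    """Return compile_fn(fn), or fn unchanged on Windows or when no compile_fn is given.

    `system` overrides platform.system() so the behaviour can be tested on any OS.
    """
    system = system or platform.system()
    if compile_fn is None or system == "Windows":
        return fn
    return compile_fn(fn)


# Hypothetical usage inside _load, mirroring the guarded line in core.py:
# gpt.gpt.forward = maybe_compile(
#     gpt.gpt.forward,
#     compile_fn=lambda f: torch.compile(f, backend="inductor", dynamic=True),
# )
```

Injecting `compile_fn` keeps the platform decision separate from PyTorch. This makes the Windows branch easy to test on any machine.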

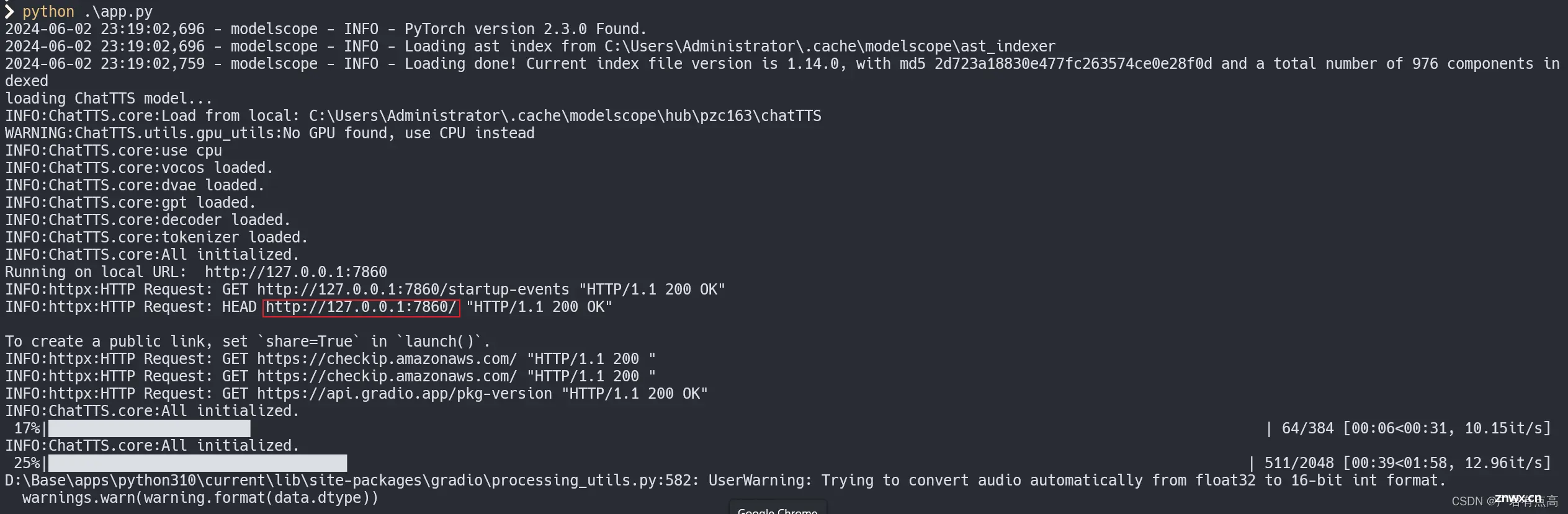

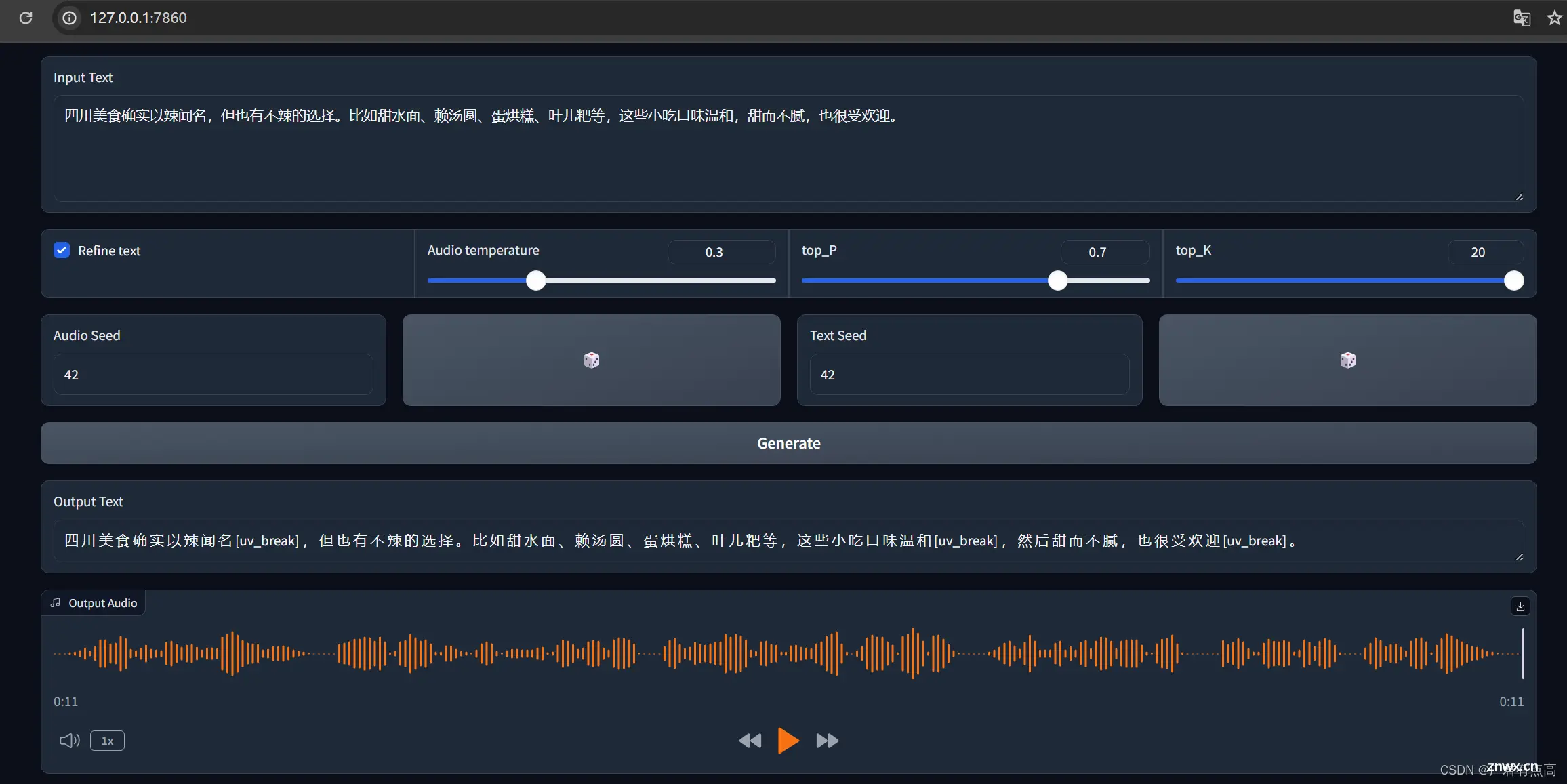

Result

After you run app.py, the console prints a local address. Open it in a browser.
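If the browser cannot reach the page, you can check from Python whether the server is responding. Below is a minimal sketch that uses only the standard library. It assumes Gradio's default address `http://127.0.0.1:7860`, so substitute whatever address app.py actually prints. `webui_is_up` is a hypothetical helper name:

```python
import urllib.error
import urllib.request


def webui_is_up(url="http://127.0.0.1:7860", timeout=3.0):
    """Return True if the server at url answers an HTTP request at all."""
    # Bypass any configured HTTP proxy: this is a local address.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server responded, just not with a 2xx status
    except (urllib.error.URLError, OSError):
        return False  # refused, timed out, or unreachable
```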

Once the page loads, you can start generating speech.
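The WebUI is not the only way to use the model. According to the core.py above, `Chat.infer()` returns a list of waveforms as NumPy arrays. The sketch below saves one of them with the standard-library `wave` module. It rests on three assumptions:

* The sample rate is 24000 Hz, which is the rate used in the ChatTTS README examples.
* Samples lie in the range [-1, 1].
* The `ChatTTS` package exposes `Chat`, as the commented usage expects.

`save_wav` is a hypothetical helper:

```python
import array
import sys
import wave


def save_wav(path, samples, rate=24000):
    """Write mono float samples in [-1, 1] to a 16-bit PCM WAV file (values are clipped)."""
    pcm = array.array("h", (int(max(-1.0, min(1.0, float(s))) * 32767) for s in samples))
    if sys.byteorder == "big":
        pcm.byteswap()  # WAV sample data is little-endian
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)  # 16-bit
        wf.setframerate(rate)
        wf.writeframes(pcm.tobytes())


# Hypothetical usage with the Chat class from core.py (not run here):
# import ChatTTS
# chat = ChatTTS.Chat()
# chat.load_models()
# wavs = chat.infer(["Hello, this is a ChatTTS test."])
# save_wav("output.wav", wavs[0].ravel())  # flatten the (1, N) array to N samples
```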

